I’m trying to compile an ONNX model with TVM for a CUDA GPU. When I enable the cuBLAS BYOC, the size of the saved file doubles. Here is the script I use. For simplicity, I did not enable the auto/meta scheduler and simply use the default schedules.

    import argparse
    import os.path as osp

    import onnx
    import tvm
    from tvm import relay


    def run(prefix):
        onnx_model = onnx.load(osp.join(prefix, "model.onnx"))
        mod, params = relay.frontend.from_onnx(onnx_model)

        if args.cublas:
            from tvm.relay.op.contrib.cublas import pattern_table

            seq = tvm.transform.Sequential(
                [
                    relay.transform.InferType(),
                    relay.transform.MergeComposite(pattern_table()),
                    relay.transform.AnnotateTarget("cublas"),
                    relay.transform.PartitionGraph(bind_constants=False),
                    relay.transform.InferType(),
                ]
            )
            mod = seq(mod)

        with tvm.transform.PassContext(3):
            factory = relay.build(mod, tvm.target.cuda(arch="sm_70"), params=params)

        factory.export_library(osp.join(prefix, "model.so"))


    if __name__ == "__main__":
        parser = argparse.ArgumentParser()
        parser.add_argument('prefix', type=str)
        parser.add_argument('--cublas', action="store_true")
        args = parser.parse_args()
        run(args.prefix)

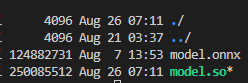

After running the script with `--cublas`, I get a shared library that is twice the size of the original ONNX file.

I also tried saving the lib, params and graph_json separately, but the total size does not change. If I don’t use the cuBLAS BYOC, however, the size of the saved .so file matches that of the ONNX file. What could be the reason for this?
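For anyone who wants to reproduce the size comparison, here is a minimal sketch using only the Python standard library. It is not part of the script above: the helper names `artifact_sizes` and `size_ratio` are made up for illustration, and the file names in the demo (`model.so`, `model.params`, `model.json`) are placeholders for wherever the artifacts were actually saved.

```python
import os
import tempfile


def artifact_sizes(paths):
    """Return {path: size in bytes} for every path that exists on disk."""
    return {p: os.path.getsize(p) for p in paths if os.path.isfile(p)}


def size_ratio(artifacts, reference):
    """Total size of the artifacts divided by the size of the reference file."""
    total = sum(artifact_sizes(artifacts).values())
    return total / os.path.getsize(reference)


if __name__ == "__main__":
    # Stand-in files so the sketch runs anywhere; point these at the real
    # ONNX model and the exported TVM artifacts instead (hypothetical names).
    with tempfile.TemporaryDirectory() as prefix:
        reference = os.path.join(prefix, "model.onnx")
        artifacts = [
            os.path.join(prefix, name)
            for name in ("model.so", "model.params", "model.json")
        ]
        with open(reference, "wb") as f:
            f.write(b"\0" * 1000)
        for path in artifacts:
            with open(path, "wb") as f:
                f.write(b"\0" * 500)

        for path, size in artifact_sizes(artifacts).items():
            print(f"{os.path.basename(path)}: {size} bytes")
        print(f"total / onnx = {size_ratio(artifacts, reference):.2f}")
```

Running it against the artifacts built with and without `--cublas` shows which file carries the extra bytes; a ratio close to 2 would be consistent with the weights being stored twice, although the numbers alone do not prove that.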