I wrote GPU code for softmax cross entropy, but I can't get it to build. What is the problem? Here is my code:

    import numpy as np
    import tvm
    from dlsys2018 import autodiff, tvm_op

    tgt_host = "llvm"
    tgt = "cuda"
    dtype = "float32"
    ctx = tvm.context(tgt, 0)

    def make_matrix_softmax_cross_entropy(shape, tgt, tgt_host, func_name,
                                          dtype="float32"):
        A_ = te.placeholder(shape, dtype=dtype, name="A_")
        A = te.placeholder(shape, dtype=dtype, name="A")
        k = te.reduce_axis((0, A.shape[1]), name="k")
        A_max = te.compute((A.shape[0],), lambda i: te.max(A[i, k], axis=k))
        A_ex = te.compute(shape, lambda i, j: te.exp(A[i, j] - A_max[i]))
        k1 = te.reduce_axis((0, A.shape[1]), name="k1")
        A_ex_sum = te.compute((A.shape[0],), lambda i: te.sum(A_ex[i, k1], axis=k1))
        A_logsoftmax = te.compute(shape, lambda i, j: te.log(A_ex[i, j] / A_ex_sum[i]))
        k2 = te.reduce_axis((0, shape[1]), name="k2")
        A_logsoftmax_sum = te.compute((shape[0], 0), lambda i: te.sum(A_logsoftmax[i, k2] * A_[i, k2], axis=k2))
        k3 = te.reduce_axis((0, shape[0]), name="k3")
        B = te.compute((1,), lambda i: te.sum(-A_logsoftmax_sum[k3], axis=k3))
        B1 = te.compute((1,), lambda i: B[i] / shape[0])
        s = te.create_schedule(B1.op)
        if tgt == "cuda":
            # I don't know why this doesn't work.
            s[B].bind(k3, te.thread_axis("threadIdx.x"))
            s[A_logsoftmax_sum].bind(A_logsoftmax_sum.op.axis[0], te.thread_axis("threadIdx.x"))
            s[A_logsoftmax].bind(A_logsoftmax.op.axis[0], te.thread_axis("threadIdx.x"))
            s[A_ex_sum].bind(A_ex_sum.op.axis[0], te.thread_axis("threadIdx.x"))
            s[A_ex].bind(A_ex.op.axis[0], te.thread_axis("threadIdx.x"))
            s[A_max].bind(A_max.op.axis[0], te.thread_axis("threadIdx.x"))
        # print(tvm.lower(s, [A, A_, B1], simple_mode=True))
        f = tvm.build(s, [A, A_, B1], tgt, tgt_host, name=func_name)
        return f

    def test_softmax_cross_entropy():
        shape = (400, 1000)
        y = np.random.uniform(-5, 5, shape).astype(dtype)
        y_ = np.random.uniform(-5, 5, shape).astype(dtype)
        out = np.zeros((1,)).astype(dtype)
        arr_y = tvm.nd.array(y, ctx=ctx)
        arr_y_ = tvm.nd.array(y_, ctx=ctx)
        arr_out = tvm.nd.array(out, ctx=ctx)
        matrix_softmax_cross_entropy = tvm_op.make_matrix_softmax_cross_entropy(shape, tgt, tgt_host, "softmax_cross_entropy")
        matrix_softmax_cross_entropy(arr_y, arr_y_, arr_out)
        out = arr_out.asnumpy()
        # numpy calculation
        cross_entropy = np.mean(
            -np.sum(y_ * np.log(autodiff.softmax_func(y)), axis=1), keepdims=True)
        np.testing.assert_allclose(cross_entropy, out, rtol=1e-5)
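
The `# numpy calculation` in the test relies on `autodiff.softmax_func`, which is not shown here. As a rough sketch of the value the kernel is expected to produce, assuming `softmax_func` is an ordinary row-wise softmax, the same stages can be written in plain NumPy. The function name `softmax_cross_entropy_reference` is only illustrative and is not part of `dlsys2018`.

```python
import numpy as np


def softmax_cross_entropy_reference(y, y_):
    """Hypothetical NumPy reference: mean over rows of -sum(y_ * log(softmax(y)))."""
    # Subtract the row-wise max before exponentiating, for numerical stability.
    y_max = np.max(y, axis=1, keepdims=True)
    ex = np.exp(y - y_max)
    ex_sum = np.sum(ex, axis=1, keepdims=True)
    log_softmax = np.log(ex / ex_sum)
    # One value per row: the label-weighted sum of log-probabilities.
    per_row = np.sum(log_softmax * y_, axis=1)
    # Negate, average over the rows, and return a 1-element array like `out`.
    return np.array([-np.sum(per_row) / y.shape[0]], dtype=y.dtype)
```

Each intermediate here lines up with one stage of the TVM code (max, exp, sum, log, per-row sum, final mean), so it may help when comparing stages one at a time.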

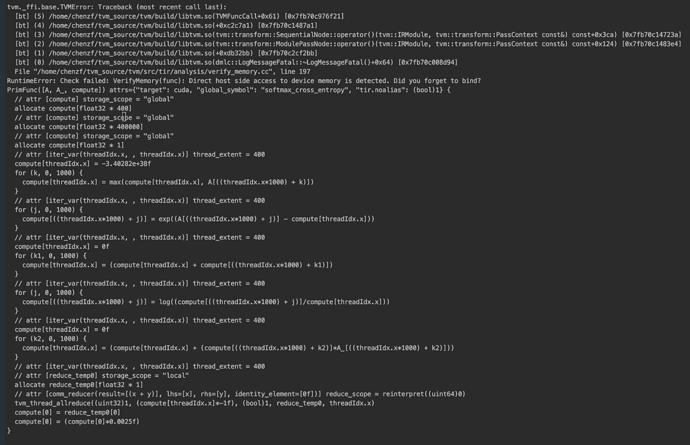

The mistake comes from this sentence:

f=tvm.build(s,[A,A_,B1],tgt,tgt_host,name=func_name)

And This is the screenshot of this error:

please help me!! Thank you!