import os

import torch
import tvm
from tvm import relay
from pytorch_pretrained_bert import BertForMaskedLM


def main(args):
    bert_model_origin = BertForMaskedLM.from_pretrained("bert-large-uncased")
    example_tensor = torch.randint(0, 100, (1, 256))

    # Dynamically quantize only the Linear layers to int8
    # (qconfig_spec selects which module types get quantized).
    model_int8 = torch.quantization.quantize_dynamic(bert_model_origin, qconfig_spec={torch.nn.Linear}, dtype=torch.qint8)
    model_int8.eval()

    trace_model = torch.jit.trace(model_int8, [example_tensor])
    trace_model.eval()

    # Collect (name, shape) for each graph input; the first input is the module itself, so skip it.
    shape_list = [(i.debugName().split('.')[0], i.type().sizes()) for i in list(trace_model.graph.inputs())[1:]]
    mod_bert, params_bert = tvm.relay.frontend.pytorch.from_pytorch(trace_model, shape_list)

    target = tvm.target.Target(target="llvm", host="llvm")
    with tvm.transform.PassContext(opt_level=3):
        lib = relay.build(mod_bert, target=target, params=params_bert)
    lib.export_library(os.path.realpath("net_int18_cpu.tar"))

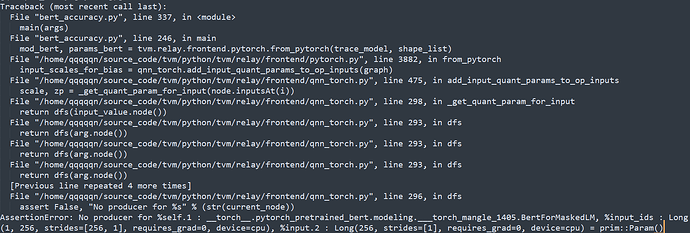

see code above, when build pre-quantization bert-large masked lm model, it will a failure like this:

I then found that the frontend tries to find the quantized weight for aten::mean by a DFS traversal back toward the root of the graph, but it finds nothing.
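To make the failing lookup concrete, here is a minimal toy sketch of that kind of backward search. It is not the code from qnn_torch.py: the Node class, the QUANTIZED_OUTPUT_OPS set, and find_quantized_producer are hypothetical names invented for illustration. The one fact it relies on is that a dynamically quantized Linear takes a float tensor and returns a float tensor, so nothing upstream of aten::mean produces a quantized tensor.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical set of ops whose *output* is a quantized tensor.
# quantized::linear_dynamic is deliberately absent: it returns float.
QUANTIZED_OUTPUT_OPS = {
    "aten::quantize_per_tensor",
    "quantized::linear",
    "quantized::conv2d",
}


@dataclass
class Node:
    """Toy graph node: an op name plus the nodes that feed it."""
    op: str
    inputs: List["Node"] = field(default_factory=list)


def find_quantized_producer(node: Node) -> Optional[Node]:
    """DFS from `node` toward the graph inputs; return the first
    upstream node that produces a quantized tensor, or None."""
    stack = list(node.inputs)
    seen = set()
    while stack:
        current = stack.pop()
        if id(current) in seen:
            continue
        seen.add(id(current))
        if current.op in QUANTIZED_OUTPUT_OPS:
            return current
        stack.extend(current.inputs)
    return None


# Dynamically quantized model: float -> linear_dynamic -> float -> mean
dyn_linear = Node("quantized::linear_dynamic", [Node("prim::Param")])
mean_dynamic = Node("aten::mean", [dyn_linear])

# Statically quantized model: the input of mean really is quantized
quantize = Node("aten::quantize_per_tensor", [Node("prim::Param")])
mean_static = Node("aten::mean", [quantize])

result_dynamic = find_quantized_producer(mean_dynamic)
result_static = find_quantized_producer(mean_static)
```

In this toy graph the search succeeds for the statically quantized case and comes back empty for the dynamically quantized one, which matches the symptom described above.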

I then commented out one line in qnn_torch.py:

num_quantized_inputs = {
    "quantized::conv2d": 1,
    "quantized::conv2d_relu": 1,
    ...
    "aten::dequantize": 1,
    # "aten::mean": 1,
    "aten::upsample_nearest2d": 1,
    "aten::upsample_bilinear2d": 1,
    "aten::relu_": 1,
    ...
    "aten::hardsigmoid": 1,
    "quantized::conv_transpose2d": 1,
}

After that, the build succeeded. So what is going wrong here?
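One possible reading of why removing the entry helps, sketched as a toy example: if a table like num_quantized_inputs decides which ops get an input quantization-parameter lookup, then dropping "aten::mean" from it means the op is simply treated as a float op and no lookup is attempted. The helper ops_needing_input_qparams and the op list below are hypothetical and only illustrate that gating; I am assuming, not asserting, that the frontend uses the table this way.

```python
from typing import Dict, List


def ops_needing_input_qparams(graph_ops: List[str], table: Dict[str, int]) -> List[str]:
    """Return the ops for which a quantized-input lookup would be attempted."""
    return [op for op in graph_ops if op in table]


# Shortened stand-in for the table in the post (not the full dict).
table = {"aten::dequantize": 1, "aten::mean": 1, "aten::relu_": 1}

# Hypothetical op sequence from a dynamically quantized BERT layer.
graph_ops = ["quantized::linear_dynamic", "aten::mean", "aten::add"]

with_mean = ops_needing_input_qparams(graph_ops, table)

# Same table with the "aten::mean" entry removed, without editing the original.
patched = {op: n for op, n in table.items() if op != "aten::mean"}
without_mean = ops_needing_input_qparams(graph_ops, patched)
```

With the entry present, aten::mean is the only op in this toy list that would trigger a lookup; with it removed, none do. Note that removing the entry globally would also change the behaviour for statically quantized models where the input of aten::mean really is quantized.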