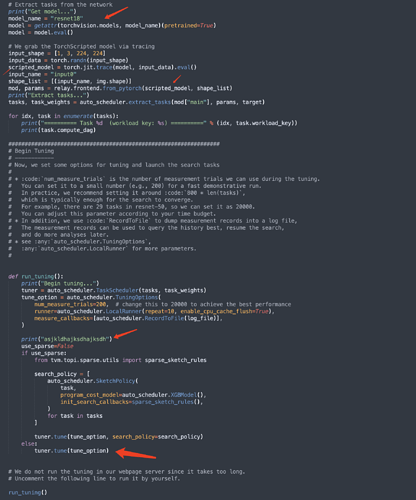

Hi Comaniac, below is my complete script. I have removed log_file but it still doesn’t work.

import numpy as np
import tvm
from tvm import relay, auto_scheduler
from tvm.relay import data_dep_optimization as ddo
import tvm.relay.testing
from tvm.contrib import graph_executor
from tvm.contrib.download import download_testdata
import torch
import torchvision
from PIL import Image

img_url = "https://github.com/dmlc/mxnet.js/blob/main/data/cat.png?raw=true"
img_path = download_testdata(img_url, "cat.png", module="data")
img = Image.open(img_path).resize((224, 224))

# Preprocess the image and convert to tensor
from torchvision import transforms

my_preprocess = transforms.Compose(
    [
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
    ]
)
img = my_preprocess(img)
img = np.expand_dims(img, 0)

use_sparse = False
target = tvm.target.Target("llvm", host="llvm")
dtype = "float32"
log_file = "demo-torch-resnet18.json"

# Extract tasks from the network
print("Get model...")
model_name = "resnet18"
model = getattr(torchvision.models, model_name)(pretrained=True)
model = model.eval()

# We grab the TorchScripted model via tracing
input_shape = [1, 3, 224, 224]
input_data = torch.randn(input_shape)
scripted_model = torch.jit.trace(model, input_data).eval()

input_name = "input0"
print(input_data.shape)
print(img.shape)
# shape_list = [(input_name, img.shape)]
shape_list = [(input_name, input_shape)]
mod, params = relay.frontend.from_pytorch(scripted_model, shape_list)

print("Extract tasks...")
tasks, task_weights = auto_scheduler.extract_tasks(mod["main"], params, target)

for idx, task in enumerate(tasks):
    print("========== Task %d (workload key: %s) ==========" % (idx, task.workload_key))
    print(task.compute_dag)

def run_tuning():

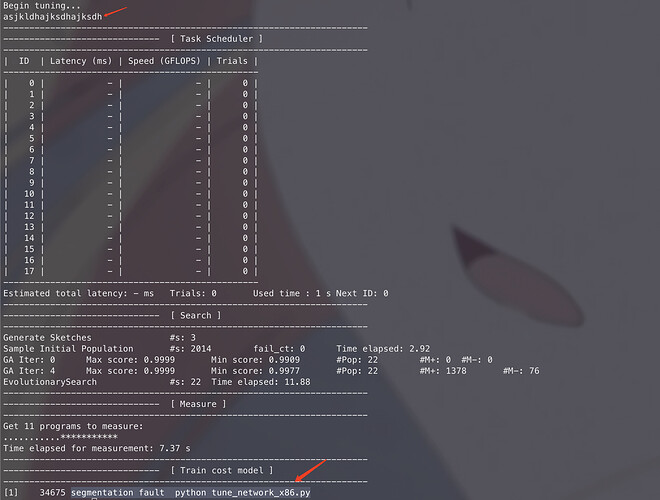

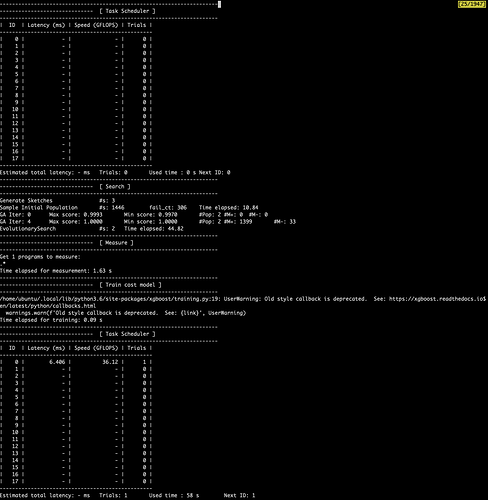

    print("Begin tuning...")
    tuner = auto_scheduler.TaskScheduler(tasks, task_weights)
    tune_option = auto_scheduler.TuningOptions(
        num_measure_trials=18,  # change this to 20000 to achieve the best performance
        runner=auto_scheduler.LocalRunner(repeat=10, enable_cpu_cache_flush=True),
        measure_callbacks=[auto_scheduler.RecordToFile(log_file)],
    )
    print("asjkldhajksdhajksdh")
    use_sparse = False
    if use_sparse:
        from tvm.topi.sparse.utils import sparse_sketch_rules

        search_policy = [
            auto_scheduler.SketchPolicy(
                task,
                program_cost_model=auto_scheduler.XGBModel(),
                init_search_callbacks=sparse_sketch_rules(),
            )
            for task in tasks
        ]
        tuner.tune(tune_option, search_policy=search_policy)
    else:
        tuner.tune(tune_option)

run_tuning()

# Compile with the history best
print("Compile...")
with auto_scheduler.ApplyHistoryBest(log_file):
    with tvm.transform.PassContext(opt_level=3, config={"relay.backend.use_auto_scheduler": True}):
        lib = relay.build(mod, target=target, params=params)

# Create graph executor
dev = tvm.device(str(target), 0)
module = graph_executor.GraphModule(lib["default"](dev))
data_tvm = tvm.nd.array((np.random.uniform(size=input_shape)).astype(dtype))

# The model input was registered as input_name ("input0") in shape_list, not "data"
module.set_input(input_name, tvm.nd.array(img.astype(dtype)))

# Evaluate
print("Evaluate inference time cost...")
print(module.benchmark(dev, repeat=3, min_repeat_ms=500))

Any hints or help would be sincerely appreciated! Thank you in advance!
