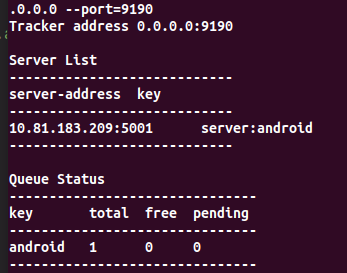

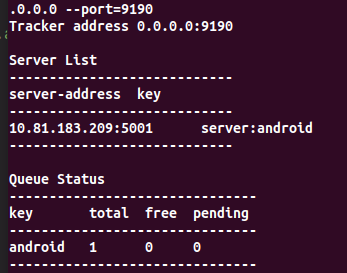

I have configured the environment, and RPC works normally, as shown in the screenshots above. The TVM sample script `android_rpc_test.py` also runs successfully.

However, when I deploy my ReID model to an Android device using TVM, I get the following error:

```
/usr/bin/python3.8 /home/d516/wbj/project/android/tvm-android/reid/ReID_tvm_Android.py
One or more operators have not been tuned. Please tune your model for better performance. Use DEBUG logging level to see more details.
Traceback (most recent call last):
  File "/home/d516/wbj/project/android/tvm-android/reid/ReID_tvm_Android.py", line 65, in <module>
    module.run()
  File "/home/d516/.local/lib/python3.8/site-packages/tvm-0.8.0-py3.8-linux-x86_64.egg/tvm/contrib/graph_executor.py", line 207, in run
    self._run()
  File "/home/d516/.local/lib/python3.8/site-packages/tvm-0.8.0-py3.8-linux-x86_64.egg/tvm/_ffi/_ctypes/packed_func.py", line 237, in __call__
    raise get_last_ffi_error()
tvm._ffi.base.TVMError: Traceback (most recent call last):
  4: TVMFuncCall
  3: _ZNSt17_Function_handlerIFvN3tvm7runtime7TVMArgsEPNS1_11TVMR
  2: tvm::runtime::RPCWrappedFunc::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const
  1: tvm::runtime::RPCClientSession::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)> const&)
  0: tvm::runtime::RPCEndpoint::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)>)
  File "/home/d516/wbj/project/android/tvm-0.8/src/runtime/rpc/rpc_endpoint.cc", line 801
TVMError:
---------------------------------------------------------------
An error occurred during the execution of TVM.
For more information, please see: https://tvm.apache.org/docs/errors.html
---------------------------------------------------------------
  Check failed: (code == RPCCode::kReturn) is false: code=kShutdown
free(): invalid pointer

Process finished with exit code 134 (interrupted by signal 6: SIGABRT)
```

This is my code. I have marked the failing line in the code; the error is raised at `module.run()`.

```python
import argparse
import os
import sys

import numpy as np
import torch
import tvm
import tvm.relay as relay
from tvm import rpc
from tvm.contrib import utils, ndk, graph_executor as runtime

from common import *  # local helpers, e.g. tvm_pre_data()
from models.model import BNNproAtt_IBN

path = sys.path[0]

parser = argparse.ArgumentParser('wbj_reid_tvm')
parser.add_argument('--channel', type=int, default=3)
parser.add_argument('--width', type=int, default=128)
parser.add_argument('--height', type=int, default=384)
parser.add_argument('--batchsize', type=int, default=32)
parser.add_argument('--device', type=str, default='cuda')
parser.add_argument('--torch_weights', type=str, default=path + '/weights/BNNproAtt0710.pt')
args = parser.parse_args()

if __name__ == '__main__':
    model = BNNproAtt_IBN()
    model.to(args.device)
    model.load_state_dict(torch.load(args.torch_weights, map_location=args.device), False)
    model.eval()

    arch = "arm64"
    target = tvm.target.Target("llvm -mtriple=%s-linux-android" % arch)

    # ------------ build the TVM runtime library ------------
    input_data, input_shape = tvm_pre_data(args.batchsize, args.channel, args.height, args.width, args.device)
    scripted_model = torch.jit.trace(model, input_data).eval()
    input_name = "input0"
    shape_list = [(input_name, input_shape)]
    mod, params = relay.frontend.from_pytorch(scripted_model, shape_list)

    with tvm.transform.PassContext(opt_level=3):
        lib = relay.build(mod, target=target, params=params)

    tmp = utils.tempdir()
    lib_fname = tmp.relpath("net.so")
    local_demo = False
    fcompile = ndk.create_shared if not local_demo else None
    lib.export_library(lib_fname, fcompile)

    # Deploy to the Android device through RPC
    # tracker_host = os.environ.get("TVM_TRACKER_HOST", "127.0.0.1")
    # tracker_port = int(os.environ.get("TVM_TRACKER_PORT", 9190))
    tracker_host = "0.0.0.0"
    tracker_port = int(9190)
    key = "android"
    tracker = rpc.connect_tracker(tracker_host, tracker_port)
    remote = tracker.request(key, priority=0, session_timeout=60)
    dev = remote.cpu(0)
    remote.upload(lib_fname)
    rlib = remote.load_module("net.so")
    module = runtime.GraphModule(rlib["default"](dev))

    # Run an inference test
    input_test = tvm.nd.array((np.random.uniform(size=input_shape)).astype("float32"))
    module.set_input(input_name, input_test)

    # !!!!!!!!!! The error is raised at this line !!!!!!!!!!
    module.run()

    print("**Evaluate inference time cost...**")
    print(module.benchmark(dev, number=1, repeat=3))
```
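For reference, here is a quick calculation of how large a single float32 input batch is with the default arguments above. It assumes that `tvm_pre_data` (from my `common` module, not shown) returns `input_shape` as `(batch, channel, height, width)`; `tensor_nbytes` is just a hypothetical helper for the arithmetic, and the figure covers only the input tensor, not the model's intermediate activations on the phone.

```python
from math import prod  # Python 3.8+


def tensor_nbytes(shape, bytes_per_elem=4):
    """Hypothetical helper: bytes occupied by a dense tensor (float32 = 4 bytes)."""
    return prod(shape) * bytes_per_elem


# Assumption: input_shape is (batch, channel, height, width), using the argparse defaults.
batchsize, channel, height, width = 32, 3, 384, 128
input_shape = (batchsize, channel, height, width)

nbytes = tensor_nbytes(input_shape)
print(f"input_shape={input_shape}: {nbytes} bytes ({nbytes / 2**20:.1f} MiB)")
# → input_shape=(32, 3, 384, 128): 18874368 bytes (18.0 MiB)

# Same calculation with --batchsize 1, for comparison
single = tensor_nbytes((1, channel, height, width))
print(f"batch 1: {single} bytes ({single / 2**20:.4f} MiB)")
```

The input alone grows linearly with `--batchsize`, so a run with `--batchsize 1` is a cheap way to compare against the failing batch-32 run.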

Does anyone know how to fix this?