Related code:

```python
import numpy as np
import tvm
from tvm import relay

# ... some other code

target = tvm.target.Target(target="cuda", host="llvm")
device = tvm.device(target.kind.name, 0)  # same device as tvm.cuda(0)

with tvm.transform.PassContext(opt_level=1):
    func = relay.vm.compile(mod, target=target, params=params)

vm = tvm.runtime.vm.VirtualMachine(func, device)

results = []
rays = np.load("mydatapath")

for i in range(1065):
    print(i)
    start = i * 1420
    end = start + 20
    aa = rays[start:end]
    print(aa.shape)

    vm.set_input("main", tvm.nd.array(aa, device))
    vm.invoke_stateful("main")  # the error is raised here
    tvm_out = vm.get_outputs()[0].numpy()
    print("tvm_out: ", len(tvm_out))
    results.append(tvm_out)

print("results: ", len(results))
result = np.stack(results)

# ... some other code to get pictures.
```
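The `Check failed: ret == 0` in `library_module.cc` appears to come from a compiled function returning a non-zero status, and one simple cause worth ruling out is a chunk whose shape differs from what the model was compiled for. The loop reads `rays[i*1420 : i*1420 + 20]`, and NumPy slicing past the end of an array returns a shorter (or empty) array instead of raising. The sketch below is not part of the original script: it assumes the model expects exactly 20 rays per call, and `short_chunks` is a hypothetical helper that lists the iterations which would receive a short chunk for a given row count.

```python
def short_chunks(num_rows, num_iters=1065, stride=1420, chunk_len=20):
    """Return (iteration, actual_rows) for every iteration whose slice
    rows[start:end] would hold fewer than chunk_len rows.

    Mirrors the loop above: start = i * stride, end = start + chunk_len.
    The default values are taken from the original script.
    """
    bad = []
    for i in range(num_iters):
        start = i * stride
        end = start + chunk_len
        # NumPy clips a slice at the end of the array instead of raising,
        # so a short or empty chunk only shows up in its shape.
        actual = max(0, min(end, num_rows) - start)
        if actual != chunk_len:
            bad.append((i, actual))
    return bad


if __name__ == "__main__":
    # Smallest row count for which every one of the 1065 chunks is full.
    needed = (1065 - 1) * 1420 + 20
    print("rows needed:", needed)
    print(short_chunks(needed))      # no short chunks
    print(short_chunks(needed - 5))  # only the last chunk is short
```

With the real data, calling `short_chunks(rays.shape[0])` before the loop returns an empty list when every slice is full-length, which would point the investigation back at the custom operator itself.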

Error:

```
Traceback (most recent call last):
  File "test_torch.py", line 205, in <module>
    vm.invoke_stateful("main")
  File "/cpfs01/user/zhangyuchang/tvm_rgst_cuda/python/tvm/runtime/vm.py", line 561, in invoke_stateful
    self._invoke_stateful(func_name)
  File "/cpfs01/user/zhangyuchang/tvm_rgst_cuda/python/tvm/_ffi/_ctypes/packed_func.py", line 239, in __call__
    raise_last_ffi_error()
  File "/cpfs01/user/zhangyuchang/tvm_rgst_cuda/python/tvm/_ffi/base.py", line 476, in raise_last_ffi_error
    raise py_err
tvm.error.InternalError: Traceback (most recent call last):
  5: tvm::runtime::PackedFuncObj::Extractor<tvm::runtime::PackedFuncSubObj<tvm::runtime::vm::VirtualMachine::GetFunction(tvm::runtime::String const&, tvm::runtime::ObjectPtr<tvm::runtime::Object> const&)::{lambda(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*)#2}> >::Call(tvm::runtime::PackedFuncObj const*, tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*)
  4: tvm::runtime::vm::VirtualMachine::GetFunction(tvm::runtime::String const&, tvm::runtime::ObjectPtr<tvm::runtime::Object> const&)::{lambda(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*)#1}::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const [clone .isra.0]
  3: tvm::runtime::vm::VirtualMachine::Invoke(tvm::runtime::vm::VMFunction const&, std::vector<tvm::runtime::ObjectRef, std::allocator<tvm::runtime::ObjectRef> > const&)
  2: tvm::runtime::vm::VirtualMachine::RunLoop(std::vector<long, std::allocator<long> > const&)
  1: tvm::runtime::vm::VirtualMachine::InvokePacked(long, tvm::runtime::PackedFunc const&, long, long, std::vector<tvm::runtime::ObjectRef, std::allocator<tvm::runtime::ObjectRef> > const&)
  0: tvm::runtime::PackedFuncObj::Extractor<tvm::runtime::PackedFuncSubObj<tvm::runtime::WrapPackedFunc(int (*)(TVMValue*, int*, int, TVMValue*, int*, void*), tvm::runtime::ObjectPtr<tvm::runtime::Object> const&)::{lambda(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*)#1}> >::Call(tvm::runtime::PackedFuncObj const*, tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*)
  File "/cpfs01/user/zhangyuchang/tvm_rgst_cuda/src/runtime/library_module.cc", line 76
InternalError: Check failed: ret == 0 (-1 vs. 0)
```

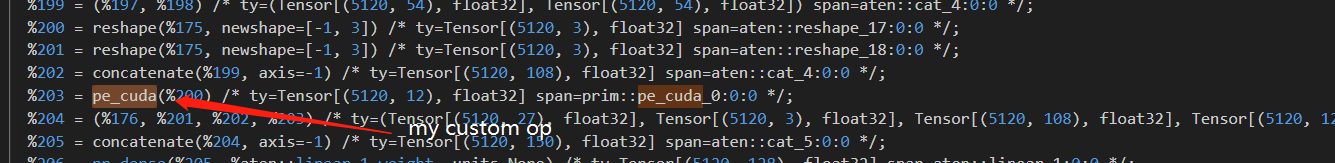

What could cause this error? Is my custom CUDA operator not registered correctly in TVM? I can already see it in the Relay IR.