I am confused about why relay.floor_mod accepts a float64 variable as a parameter, so I wrote the following script:

```python
import tvm
from tvm import relay
from tvm.contrib import graph_runtime
import numpy as np


def vmobj_to_list(o, dtype="float64"):
    # Flatten a (possibly nested) executor result into a flat list of NDArrays.
    if isinstance(o, tvm.nd.NDArray):
        return [o]
    elif isinstance(o, tvm.runtime.container.ADT):
        result = []
        for f in o:
            result.extend(vmobj_to_list(f, dtype))
        return result
    else:
        return o


# Graph: floor_mod(exp(var_0), 787.644532), all float64.
var_0 = relay.var("var_0", dtype="float64", shape=())
var_1 = relay.exp(var_0)
const_2 = relay.const([787.644532], dtype="float64")
var_3 = relay.floor_mod(var_1, const_2)
out_tuple = relay.Tuple([var_3])  # renamed from `tuple` to avoid shadowing the builtin
F = relay.Function([var_0], out_tuple)

mod = tvm.IRModule()
mod['main'] = F
mod = relay.transform.InferType()(mod)

# Run 1: compile for LLVM (CPU) and execute with the graph runtime.
graph, lib, params = relay.build(mod, target='llvm')
module = graph_runtime.create(graph, lib, tvm.device('llvm', 0))

# Run 2: the graph executor targeting CUDA.
intrp = relay.build_module.create_executor('graph', mod, tvm.device('cuda', 0), 'cuda')

input_0 = np.array(415.748715, dtype='float64')

module.set_input('var_0', input_0)
module.set_input(**params)
module.run()
res0_0 = module.get_output(0).asnumpy()

res1 = intrp.evaluate()(input_0)
res1 = vmobj_to_list(res1)
res1_0 = res1[0].asnumpy()

# Compare the LLVM and CUDA results.
np.testing.assert_allclose(res0_0, res1_0, atol=1e-3, rtol=1e-3)
```

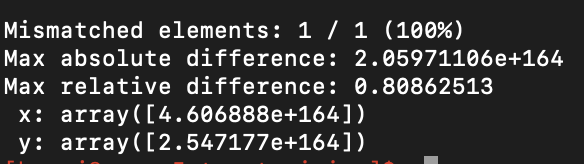

assert_allclose then reported a mismatch between res0_0 (computed on LLVM) and res1_0 (computed on CUDA). I think this is because floor_mod on float64 is undefined and is therefore interpreted differently by LLVM and CUDA. If that is the case, why does TVM still allow floor_mod to take a float64 variable as a parameter?
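One way to probe this hypothesis without TVM is a small NumPy sketch. It assumes (from my reading of TOPI, not verified for every TVM version) that floor_mod on floats is computed as a - floor(a / b) * b; the helpers floor_mod_unfused and floor_mod_fused are hypothetical names for illustration. For ordinary operands that formula is well defined and agrees with np.mod. With the input from the script, however, exp(415.748715) is on the order of 1e180, far above 2**53, so a / b has no fractional bits left and a - floor(a / b) * b is pure rounding error. Whether the multiply and the subtract are fused into one FMA (a plausible but unverified difference between the LLVM and CUDA code paths) changes that error term.

```python
from fractions import Fraction

import numpy as np


def floor_mod_unfused(a, b):
    """Assumed float lowering: a - floor(a / b) * b, where the multiply
    and the subtract are each rounded separately."""
    return a - np.floor(a / b) * b


def floor_mod_fused(a, b):
    """Same formula, but a - q * b is rounded only once, as a fused
    multiply-add (FMA) would do. Emulated exactly with Fraction."""
    q = float(np.floor(a / b))
    return float(Fraction(a) - Fraction(q) * Fraction(b))


# Small operands: the formula matches NumPy's floored modulo.
small = floor_mod_unfused(-7.5, 2.0)
print(small, np.mod(-7.5, 2.0))  # both 0.5

# The operands from the script above.
a = float(np.exp(np.float64(415.748715)))  # on the order of 1e180
b = 787.644532

unfused = float(floor_mod_unfused(np.float64(a), np.float64(b)))
fused = floor_mod_fused(a, b)
exact = float(Fraction(a) % Fraction(b))  # true floored remainder, in [0, b)
ref = float(np.mod(a, b))                 # NumPy computes this via fmod

print("unfused:", unfused)
print("fused:  ", fused)
print("exact:  ", exact, " numpy:", ref)
```

In this sketch the unfused result can only be 0 or a multiple of the spacing between doubles near a (about 4.6e164), and the fused result can be as large as roughly 3.5e164, while the true remainder lies in [0, 787.644532). Under the stated assumption, the two evaluation orders can disagree wildly without either being a meaningful remainder for this input.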

BTW, this is the message raised by np.testing.assert_allclose(res0_0, res1_0, atol=1e-3, rtol=1e-3):