Yes, after several attempts at building and rebuilding I was able to make it work on the Ultra-96, but I haven't been as lucky with the PYNQ-Z2 board. Thanks all the same for the quick response.

Any update on this issue? I tried rebuilding multiple times on the PYNQ side but could not get it to work.

Enable the runtime in config.cmake on the board and re-run TVM.

Hi, I am still struggling with this issue:

I'm running a PYNQ-Z2 with PYNQ 2.6.0, and TVM v0.7.0 (728b82957) on both host and device.

Output on device:

```
INFO:root:Loading VTA library: /home/xilinx/tvm/build/libvta.so
INFO:RPCServer:load_module /tmp/tmpjsunht7f/conv2d.o
INFO:root:Loading VTA library: /home/xilinx/tvm/build/libvta.so
INFO:RPCServer:load_module /tmp/tmpjsunht7f/conv2d.o
INFO:root:Loading VTA library: /home/xilinx/tvm/build/libvta.so
INFO:RPCServer:load_module /tmp/tmpjsunht7f/conv2d.o
Process Process-1:5:
Traceback (most recent call last):
  File "/usr/lib/python3.6/multiprocessing/process.py", line 258, in _bootstrap
    self.run()
  File "/usr/lib/python3.6/multiprocessing/process.py", line 93, in run
    self._target(*self._args, **self._kwargs)
  File "/home/xilinx/tvm/python/tvm/rpc/server.py", line 118, in _serve_loop
    _ffi_api.ServerLoop(sockfd)
  File "/home/xilinx/tvm/python/tvm/_ffi/_ctypes/packed_func.py", line 237, in __call__
    raise get_last_ffi_error()
AttributeError: Traceback (most recent call last):
  [bt] (4) /home/xilinx/tvm/build/libtvm_runtime.so(TVMFuncCall+0x37) [0xb567280c]
  [bt] (3) /home/xilinx/tvm/build/libtvm_runtime.so(+0x898fc) [0xb56cd8fc]
  [bt] (2) /home/xilinx/tvm/build/libtvm_runtime.so(tvm::runtime::RPCServerLoop(int)+0x6b) [0xb56cd7a0]
  [bt] (1) /home/xilinx/tvm/build/libtvm_runtime.so(tvm::runtime::RPCEndpoint::ServerLoop()+0x125) [0xb56bb79a]
  [bt] (0) /home/xilinx/tvm/build/libtvm_runtime.so(+0x2c66c) [0xb567066c]
  File "/home/xilinx/tvm/python/tvm/_ffi/_ctypes/packed_func.py", line 81, in cfun
    rv = local_pyfunc(*pyargs)
  File "/home/xilinx/tvm/vta/python/vta/exec/rpc_server.py", line 85, in server_shutdown
    runtime_dll[0].VTARuntimeShutdown()
  File "/usr/lib/python3.6/ctypes/__init__.py", line 361, in __getattr__
    func = self.__getitem__(name)
  File "/usr/lib/python3.6/ctypes/__init__.py", line 366, in __getitem__
    func = self._FuncPtr((name_or_ordinal, self))
AttributeError: /home/xilinx/tvm/build/libvta.so: undefined symbol: VTARuntimeShutdown
INFO:RPCServer:connection from ('192.168.178.21', 55061)
INFO:root:Generating grammar tables from /usr/lib/python3.6/lib2to3/Grammar.txt
INFO:root:Generating grammar tables from /usr/lib/python3.6/lib2to3/PatternGrammar.txt
INFO:root:Program FPGA with 1x16_i8w8a32_15_15_18_17.bit
INFO:root:Skip reconfig_runtime due to same config.
INFO:root:Loading VTA library: /home/xilinx/tvm/build/libvta.so
INFO:RPCServer:load_module /tmp/tmptelh1dui/conv2d.o
INFO:root:Loading VTA library: /home/xilinx/tvm/build/libvta.so
INFO:root:Loading VTA library: /home/xilinx/tvm/build/libvta.so
```

After that, the library is loaded repeatedly until the server crashes.

On the host, it's the same error as before.

I cleaned and rebuilt multiple times without success. What exactly is the issue? Why is make clean supposed to help?

Hi, I managed to solve this on PYNQ-Z2 v2.6.0 and TVM 0.8.dev0.

On the PYNQ board

After a fresh git clone:

Define the home directory (I was using the Jupyter terminal; this may already be set if you use SSH):

```
export HOME=/home/xilinx
```

Then add a few other variables to ~/.bashrc (note that the directory in the TVM tree is 3rdparty/vta-hw, with a hyphen):

```
export TVM_HOME=/path/to/tvm
export PYTHONPATH=$TVM_HOME/python:$TVM_HOME/vta/python:$PYTHONPATH
export VTA_HW_PATH=$TVM_HOME/3rdparty/vta-hw
```

Then run source ~/.bashrc.
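Because a later reply in this thread traces the missing symbols to wrong environment variables, it can help to verify the three variables before building. The following is a minimal sketch, not part of TVM: check_vta_env is a hypothetical helper, and it assumes only the layout described in this post plus the 3rdparty/vta-hw/config/pkg_config.py location mentioned further down the thread.

```python
import os
from pathlib import Path


def check_vta_env(env=None):
    """Return a list of problems with the VTA-related environment variables.

    Hypothetical helper: it only encodes the layout from this post
    (TVM_HOME, PYTHONPATH, VTA_HW_PATH) and checks nothing else.
    """
    env = dict(os.environ) if env is None else dict(env)
    tvm_home = env.get("TVM_HOME")
    if not tvm_home:
        return ["TVM_HOME is not set"]

    problems = []
    # PYTHONPATH must contain both the TVM and the VTA Python packages.
    entries = {os.path.normpath(p)
               for p in env.get("PYTHONPATH", "").split(os.pathsep) if p}
    for sub in ("python", os.path.join("vta", "python")):
        wanted = os.path.normpath(os.path.join(tvm_home, sub))
        if wanted not in entries:
            problems.append(f"PYTHONPATH does not contain {wanted}")

    # VTA_HW_PATH must point at the vta-hw checkout (hyphen, not underscore);
    # the presence of config/pkg_config.py is used as a sanity check.
    hw = env.get("VTA_HW_PATH")
    if not hw:
        problems.append("VTA_HW_PATH is not set")
    elif not (Path(hw) / "config" / "pkg_config.py").is_file():
        problems.append(f"VTA_HW_PATH={hw} has no config/pkg_config.py")
    return problems
```

Run print(check_vta_env()) on the board after sourcing ~/.bashrc; an empty list means the variables match the expected layout.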

Finally, just follow https://tvm.apache.org/docs/vta/install.html#pynq-side-rpc-server-build-deployment

The important part is to build twice:

```
make runtime vta -j2
# FIXME (tmoreau89): remove this step by fixing the cmake build
make clean; make runtime vta -j2
```

test_benchmark_topi_conv2d and deploy_classification then work perfectly.

Hi denishem, thanks for the response. I finally managed to get it to run. I had to run this at least 4 times; before that I wasn't sure what the issue was, so I tried different TVM versions and so on.

However, the issue is not related to the TVM code (with 0.7.0). From my understanding, it has something to do with the limited resources of the PYNQ, which cannot build everything in one run. That's why building multiple times helps, until the whole VTA library has been built. For other people: to be sure that it's working, you should see many more lines like

```
make[4]: Entering directory '/home/xilinx/tvm/build'
make[4]: Leaving directory '/home/xilinx/tvm/build'
```

than on the first build attempt.
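A more direct check than counting make[4] lines is to ask whether the rebuilt libvta.so actually exports VTARuntimeShutdown, the symbol the RPC server could not find in the device log above. A minimal sketch using only the standard-library ctypes module; exports_symbol is a hypothetical helper name, and the lookup mirrors the runtime_dll[0].VTARuntimeShutdown access that failed in rpc_server.py.

```python
import ctypes


def exports_symbol(lib_path, symbol):
    """Return True if the shared library at lib_path exports `symbol`.

    ctypes resolves attributes with dlsym and raises AttributeError
    ("undefined symbol") when the name is missing, which is exactly
    the error seen in the RPC server log.
    """
    lib = ctypes.CDLL(lib_path)
    try:
        getattr(lib, symbol)
    except AttributeError:
        return False
    return True
```

For example, exports_symbol("/home/xilinx/tvm/build/libvta.so", "VTARuntimeShutdown") should return True after a complete build; if it returns False, rebuild again. If CDLL itself raises OSError, nm -D --defined-only build/libvta.so | grep VTARuntimeShutdown inspects the dynamic symbol table without loading the library.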

Still using PYNQ 2.6.0 and TVM v0.7.0.

Finally, I can get started with this.

Any update on this problem?

Hello @thierry,

I have the same problem building libvta.so for the de10nano target. After building with

$ make runtime vta -j2

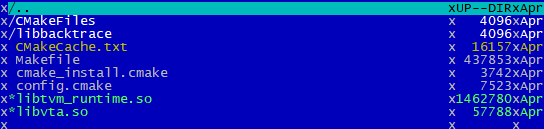

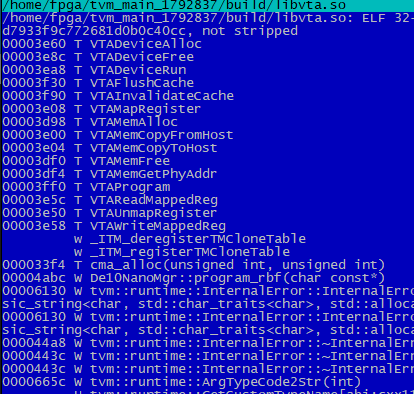

or after the RPC server rebuilds the runtime, the symbol VTARuntimeShutdown is not present in libvta.so (and the suggested fix, running $ make clean; make runtime vta -j2 several times, has no effect):

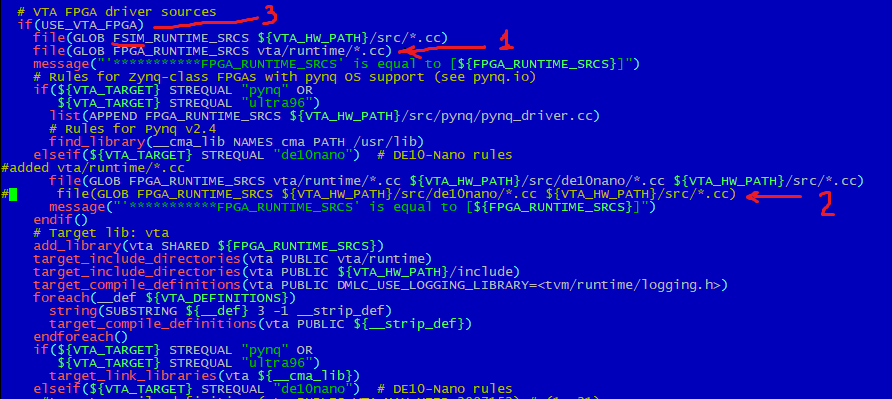

VTARuntimeShutdown is defined in tvm/vta/runtime/runtime.cc, so I edited VTA.cmake: it seems that for the de10nano target, runtime.cc ends up missing from FPGA_RUNTIME_SRCS (marks 1 and 2). With this fix, libvta.so is about 2x larger than before and VTARuntimeShutdown is present.

There also seems to be a typo in VTA.cmake (see mark 3), FSIM_RUNTIME_SRCS, but it doesn't matter because ${VTA_HW_PATH}/src doesn't contain any .cc files.

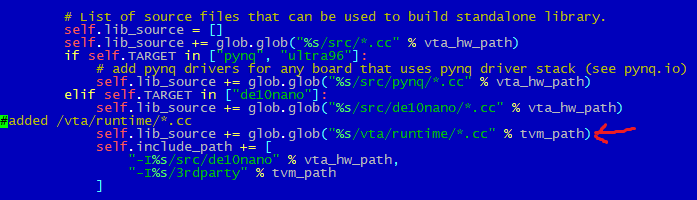
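To confirm whether the CMake build compiles runtime.cc at all, before or after editing VTA.cmake, one option is CMake's standard compile database: configuring with cmake .. -DCMAKE_EXPORT_COMPILE_COMMANDS=ON writes build/compile_commands.json, a list of entries with "directory", "command" and "file" keys. The sketch below assumes that file exists; compiled_sources and missing_sources are hypothetical helper names. It only covers the CMake build, not the rebuild the RPC server performs through pkg_config.py.

```python
import json
from pathlib import Path


def compiled_sources(compile_commands_path):
    """Return the POSIX paths of all files listed in a CMake compile_commands.json."""
    entries = json.loads(Path(compile_commands_path).read_text())
    files = set()
    for entry in entries:
        src = Path(entry["file"])
        if not src.is_absolute():
            # A relative "file" is interpreted against the entry's "directory".
            src = Path(entry["directory"]) / src
        files.add(src.as_posix())
    return files


def missing_sources(compile_commands_path, required=("vta/runtime/runtime.cc",)):
    """Return the entries of `required` that no compile command builds."""
    files = compiled_sources(compile_commands_path)
    return [req for req in required
            if not any(f.endswith("/" + req) for f in files)]
```

If missing_sources("build/compile_commands.json") returns ["vta/runtime/runtime.cc"], the generated build never compiles the file that defines VTARuntimeShutdown, which matches the symptom described above.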

Since VTARuntimeShutdown also disappears from libvta.so after the RPC server rebuilds the runtime, I added a similar fix to \3rdparty\vta-hw\config\pkg_config.py.

Could you tell me whether the things I found are really errors in VTA.cmake and pkg_config.py?

The problem is that various tests now fail with the same error. I doubt the cause is how libvta was built, but I still want to make sure.

(~$ python3 /home/arinan/tvm_main_1792837/vta/tutorials/test_program_rpc.py succeeds: the bitstream is successfully downloaded to the FPGA.)

Here is the error log on the host side:

```
~$ python3 /home/arinan/tvm_main_1792837/vta/tutorials/matrix_multiply.py
primfn(A_1: handle, B_1: handle, C_1: handle) -> ()
  attr = {"global_symbol": "main", "tir.noalias": True}
  buffers = {C: Buffer(C_2: Pointer(int8), int8, [1, 16, 1, 16], []),
             A: Buffer(A_2: Pointer(int8), int8, [1, 16, 1, 16], []),
             B: Buffer(B_2: Pointer(int8), int8, [16, 16, 16, 16], [])}
  buffer_map = {A_1: A, B_1: B, C_1: C} {
  attr [A_buf: Pointer(int8)] "storage_scope" = "global";
  allocate(A_buf, int8, [256]);
  attr [B_buf: Pointer(int8)] "storage_scope" = "global";
  allocate(B_buf, int8, [65536]);
  attr [C_buf: Pointer(int32)] "storage_scope" = "global";
  allocate(C_buf, int32, [256]) {
    for (i1: int32, 0, 16) {
      for (i3: int32, 0, 16) {
        A_buf[((i1*16) + i3)] = (int8*)A_2[((i1*16) + i3)]
      }
    }
    for (i0: int32, 0, 16) {
      for (i1_1: int32, 0, 16) {
        for (i2: int32, 0, 16) {
          for (i3_1: int32, 0, 16) {
            B_buf[((((i0*4096) + (i1_1*256)) + (i2*16)) + i3_1)] = (int8*)B_2[((((i0*4096) + (i1_1*256)) + (i2*16)) + i3_1)]
          }
        }
      }
    }
    for (co: int32, 0, 16) {
      for (ci: int32, 0, 16) {
        C_buf[((co*16) + ci)] = 0
        for (ko: int32, 0, 16) {
          for (ki: int32, 0, 16) {
            C_buf[((co*16) + ci)] = ((int32*)C_buf[((co*16) + ci)] + (cast(int32, (int8*)A_buf[((ko*16) + ki)])*cast(int32, (int8*)B_buf[((((co*4096) + (ko*256)) + (ci*16)) + ki)])))
          }
        }
      }
    }
    for (i1_2: int32, 0, 16) {
      for (i3_2: int32, 0, 16) {
        C_2[((i1_2*16) + i3_2)] = cast(int8, (int32*)C_buf[((i1_2*16) + i3_2)])
      }
    }
  }
}
```
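Reading the indexing of the first (unscheduled) primfn, it computes C[0][co][0][ci] = cast(int8, sum over ko, ki of A[0][ko][0][ki] * B[co][ko][ci][ki]) with an int32 accumulator. A plain-Python reference of that loop nest can help check values returned from the board. This is a sketch: the function names are illustrative, nested lists stand in for the NDArrays, and the int8 cast is assumed to wrap in two's complement.

```python
BLOCK = 16  # inner block size, taken from the [1, 16, 1, 16] buffer shapes in the IR


def to_int8(x):
    """Truncate an integer to int8 with two's-complement wraparound."""
    return (x + 128) % 256 - 128


def gemm_reference(A, B):
    """Mirror the unscheduled loop nest.

    A: shape [1][n_red][1][BLOCK], B: shape [n_out][n_red][BLOCK][BLOCK].
    Returns C with shape [1][n_out][1][BLOCK].
    """
    n_out = len(B)      # co extent (16 in the IR)
    n_red = len(B[0])   # ko extent (16 in the IR)
    C = [[[[0] * BLOCK] for _ in range(n_out)]]
    for co in range(n_out):
        for ci in range(BLOCK):
            acc = 0  # plays the role of the int32 C_buf
            for ko in range(n_red):
                for ki in range(BLOCK):
                    acc += A[0][ko][0][ki] * B[co][ko][ci][ki]
            C[0][co][0][ci] = to_int8(acc)
    return C
```

The tiled second primfn and the VTA-lowered third primfn should produce the same values; only where the data lives (local.inp_buffer, local.wgt_buffer, local.acc_buffer) and how it is moved changes.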

```
primfn(A_1: handle, B_1: handle, C_1: handle) -> ()
  attr = {"global_symbol": "main", "tir.noalias": True}
  buffers = {C: Buffer(C_2: Pointer(int8), int8, [1, 16, 1, 16], []),
             A: Buffer(A_2: Pointer(int8), int8, [1, 16, 1, 16], []),
             B: Buffer(B_2: Pointer(int8), int8, [16, 16, 16, 16], [])}
  buffer_map = {A_1: A, B_1: B, C_1: C} {
  attr [C_buf: Pointer(int32)] "storage_scope" = "local.acc_buffer";
  allocate(C_buf, int32, [256]);
  attr [A_buf: Pointer(int8)] "storage_scope" = "local.inp_buffer";
  allocate(A_buf, int8, [16]);
  attr [B_buf: Pointer(int8)] "storage_scope" = "local.wgt_buffer";
  allocate(B_buf, int8, [16]) {
    for (co: int32, 0, 16) {
      for (ci: int32, 0, 16) {
        C_buf[((co*16) + ci)] = 0
        for (ko: int32, 0, 16) {
          attr [IterVar(i0: int32, (nullptr), "DataPar", "")] "pragma_dma_copy" = 1;
          for (i3: int32, 0, 16) {
            A_buf[i3] = (int8*)A_2[((ko*16) + i3)]
          }
          attr [IterVar(i0_1: int32, (nullptr), "DataPar", "")] "pragma_dma_copy" = 1;
          for (i3_1: int32, 0, 16) {
            B_buf[i3_1] = (int8*)B_2[((((co*4096) + (ko*256)) + (ci*16)) + i3_1)]
          }
          for (ki: int32, 0, 16) {
            C_buf[((co*16) + ci)] = ((int32*)C_buf[((co*16) + ci)] + (cast(int32, (int8*)A_buf[ki])*cast(int32, (int8*)B_buf[ki])))
          }
        }
      }
    }
    attr [IterVar(i0_2: int32, (nullptr), "DataPar", "")] "pragma_dma_copy" = 1;
    for (i1: int32, 0, 16) {
      for (i3_2: int32, 0, 16) {
        C_2[((i1*16) + i3_2)] = cast(int8, (int32*)C_buf[((i1*16) + i3_2)])
      }
    }
  }
}
```

```
primfn(A_1: handle, B_1: handle, C_1: handle) -> ()
  attr = {"global_symbol": "main", "tir.noalias": True}
  buffers = {C: Buffer(C_2: Pointer(int8), int8, [1, 16, 1, 16], []),
             A: Buffer(A_2: Pointer(int8), int8, [1, 16, 1, 16], []),
             B: Buffer(B_2: Pointer(int8), int8, [16, 16, 16, 16], [])}
  buffer_map = {A_1: A, B_1: B, C_1: C} {
  attr [C_buf: Pointer(int32)] "storage_scope" = "local.acc_buffer";
  attr [A_buf: Pointer(int8)] "storage_scope" = "local.inp_buffer";
  attr [B_buf: Pointer(int8)] "storage_scope" = "local.wgt_buffer" {
    attr [IterVar(vta: int32, (nullptr), "ThreadIndex", "vta")] "coproc_scope" = 2 {
      attr [IterVar(vta, (nullptr), "ThreadIndex", "vta")] "coproc_uop_scope" = "VTAPushGEMMOp" {
        @tir.call_extern("VTAUopLoopBegin", 16, 1, 0, 0, dtype=int32)
        @tir.vta.uop_push(0, 1, 0, 0, 0, 0, 0, 0, dtype=int32)
        @tir.call_extern("VTAUopLoopEnd", dtype=int32)
      }
      @tir.vta.coproc_dep_push(2, 1, dtype=int32)
    }
    for (ko: int32, 0, 16) {
      attr [IterVar(vta, (nullptr), "ThreadIndex", "vta")] "coproc_scope" = 1 {
        @tir.vta.coproc_dep_pop(2, 1, dtype=int32)
        @tir.call_extern("VTALoadBuffer2D", @tir.tvm_thread_context(@tir.vta.command_handle(, dtype=handle), dtype=handle), A_2, ko, 1, 1, 1, 0, 0, 0, 0, 0, 2, dtype=int32)
        @tir.call_extern("VTALoadBuffer2D", @tir.tvm_thread_context(@tir.vta.command_handle(, dtype=handle), dtype=handle), B_2, ko, 1, 16, 16, 0, 0, 0, 0, 0, 1, dtype=int32)
        @tir.vta.coproc_dep_push(1, 2, dtype=int32)
      }
      attr [IterVar(vta, (nullptr), "ThreadIndex", "vta")] "coproc_scope" = 2 {
        @tir.vta.coproc_dep_pop(1, 2, dtype=int32)
        attr [IterVar(vta, (nullptr), "ThreadIndex", "vta")] "coproc_uop_scope" = "VTAPushGEMMOp" {
          @tir.call_extern("VTAUopLoopBegin", 16, 1, 0, 1, dtype=int32)
          @tir.vta.uop_push(0, 0, 0, 0, 0, 0, 0, 0, dtype=int32)
          @tir.call_extern("VTAUopLoopEnd", dtype=int32)
        }
        @tir.vta.coproc_dep_push(2, 1, dtype=int32)
      }
    }
    @tir.vta.coproc_dep_push(2, 3, dtype=int32)
    @tir.vta.coproc_dep_pop(2, 1, dtype=int32)
    attr [IterVar(vta, (nullptr), "ThreadIndex", "vta")] "coproc_scope" = 3 {
      @tir.vta.coproc_dep_pop(2, 3, dtype=int32)
      @tir.call_extern("VTAStoreBuffer2D", @tir.tvm_thread_context(@tir.vta.command_handle(, dtype=handle), dtype=handle), 0, 4, C_2, 0, 16, 1, 16, dtype=int32)
    }
    @tir.vta.coproc_sync(, dtype=int32)
  }
}
```

```
Traceback (most recent call last):
  File "/home/arinan/tvm_main_1792837/vta/tutorials/matrix_multiply.py", line 438, in <module>
    f(A_nd, B_nd, C_nd)
  File "/home/arinan/tvm_main_1792837/python/tvm/runtime/module.py", line 115, in __call__
    return self.entry_func(*args)
  File "/home/arinan/tvm_main_1792837/python/tvm/_ffi/_ctypes/packed_func.py", line 237, in __call__
    raise get_last_ffi_error()
tvm._ffi.base.TVMError: Traceback (most recent call last):
  7: TVMFuncCall
        at /home/arinan/tvm_main_1792837/src/runtime/c_runtime_api.cc:480
  6: tvm::runtime::PackedFunc::CallPacked(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const
        at /home/arinan/tvm_main_1792837/include/tvm/runtime/packed_func.h:1150
  5: std::function<void (tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*)>::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const
        at /usr/include/c++/7/bits/std_function.h:706
  4: _M_invoke
        at /usr/include/c++/7/bits/std_function.h:316
  3: operator()
        at /home/arinan/tvm_main_1792837/src/runtime/rpc/rpc_module.cc:278
  2: tvm::runtime::RPCWrappedFunc::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const
        at /home/arinan/tvm_main_1792837/src/runtime/rpc/rpc_module.cc:127
  1: tvm::runtime::RPCClientSession::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)> const&)
        at /home/arinan/tvm_main_1792837/src/runtime/rpc/rpc_endpoint.cc:980
  0: tvm::runtime::RPCEndpoint::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)>)
        at /home/arinan/tvm_main_1792837/src/runtime/rpc/rpc_endpoint.cc:797
  File "/home/arinan/tvm_main_1792837/src/runtime/rpc/rpc_endpoint.cc", line 797
TVMError:
---------------------------------------------------------------
An internal invariant was violated during the execution of TVM.
Please read TVM's error reporting guidelines.
More details can be found here: https://discuss.tvm.ai/t/error-reporting/7793.
---------------------------------------------------------------
  Check failed: (code == RPCCode::kReturn) is false: code=1
```

Here is the log from the target:

```
INFO:root:*************TARGET:'de10nano'
INFO:root:Skip reconfig_runtime due to same config.
INFO:root:Loading VTA library: /home/fpga/tvm_main_1792837/vta/python/vta/../../../build/libvta.so
INFO:RPCServer:load_module /tmp/tmpbtuyiox3/gemm.o
[18:17:30] /home/fpga/tvm_main_1792837/3rdparty/cma/cma_api_impl.h:154: Allocating 256 bytes of contigous memory
[18:17:30] /home/fpga/tvm_main_1792837/3rdparty/cma/cma_api_impl.h:154: Allocating 65536 bytes of contigous memory
[18:17:30] /home/fpga/tvm_main_1792837/3rdparty/cma/cma_api_impl.h:154: Allocating 256 bytes of contigous memory
[18:17:30] /home/fpga/tvm_main_1792837/3rdparty/cma/cma_api_impl.h:154: Allocating 33554432 bytes of contigous memory
[18:17:30] /home/fpga/tvm_main_1792837/3rdparty/cma/cma_api_impl.h:154: Allocating 33554432 bytes of contigous memory
```

I followed the TVM and VTA installation guide and encountered the same error when I ran test_benchmark_topi_conv2d.py and vta_get_started.py on a PYNQ-Z2 board. The error occurred when accessing ctx (remote[0]:ext_dev(0)) in vta_get_started.py. It seems that tvm.rpc.RPCSession doesn't construct the extension device properly. For example, when I tried to access the ctx attribute in vta_get_started.py (tvm.runtime.Device.exist), a recursion error occurred.

I have solved the problem. The cause of the error is that the RPC server on the PYNQ device rebuilds libvta.so. On the device, it gets the list of source files for the library from pkg_config.py, but with some wrong settings, such as incorrect environment variables, it cannot find the correct source files. The library it builds then lacks some symbols, which leads to the undefined symbols and infinite recursion.

The solution is to comment out the code in rpc_server.py that rebuilds the runtime library, and instead run the build manually whenever libvta.so needs to be rebuilt.

Hello,

I installed PYNQ v2.7 on my board and followed the VTA Installation Guide (tvm 0.9.dev182+ge718f5a8a documentation), but I get the error “No module named pynq” on the host side. Can anyone help? It appears to be due to the venv on v2.7.

Hi,

I have the same problem: “no module named pynq” when running ./test_program_rpc.py.

Did you solve this problem?

If you don’t mind, could you please provide more information about the issue you are facing?

Kind regards,

Hi,

Any help fixing the RPC server problem?

PYNQ-Z1 board

TVM 12.0

PYNQ v3.0.1 on the Z1

Ubuntu 20.04

RPCError: Error caught from RPC call: FileNotFoundError: [Errno 2] No such file or directory: '/tmp/tmpmbhpp83t/1x16_i8w8a32_15_15_18_17.bit'

Thank you all!