My target is "cuda", and I quantized the model using the first approach from the "Deploy a Quantized Model on Cuda" tutorial (tvm 0.8.dev0 documentation).

After quantization, inference is very slow. Why does this happen? Did I do something wrong?

The code is:

import os

import numpy as np
import tvm
from PIL import Image
from torch.utils.data import dataloader
from torchvision import transforms
from tvm import relay
from tvm.contrib import graph_executor as runtime  # assumed source of the `runtime` alias

# `args` is the parsed command-line namespace, defined elsewhere in the script.

# ------------get test preprocess data----------------
class Reid_date:
    def __init__(self, root_path, transforms):
        self.transforms = transforms
        self.root_path = root_path
        self.images = self.get_images()

    def get_images(self):
        # Collect the full path of every file in the image directory.
        if not os.path.exists(self.root_path):
            raise ValueError("The image path does not exist, please check it!")
        return ['{}/{}'.format(self.root_path, i) for i in os.listdir(self.root_path)]

    def __getitem__(self, item):
        path = self.images[item]
        img = Image.open(path).convert('RGB')
        img = self.transforms(img)
        return img, path

    def __len__(self):
        return len(self.images)


class Data:
    def __init__(self, data_path):
        self.batchsize = args.batchsize
        self.data_path = data_path
        # Resize, convert to a tensor, and normalize with the ImageNet mean/std.
        test_transforms = transforms.Compose([
            transforms.Resize((args.height, args.width)),
            transforms.ToTensor(),
            transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
        ])
        self.testset = Reid_date(self.data_path, test_transforms)
        self.test_loader = dataloader.DataLoader(self.testset, batch_size=self.batchsize, num_workers=0)

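# ------------define quantize data and return mod-----------
def calibrate_dataset():
    val_data = Data(args.quantize_data_path)
    for i, data in enumerate(val_data.test_loader):
        # Stop after roughly 512 samples; the factor 32 assumes args.batchsize == 32.
        if i * 32 >= 512:
            break
        data_temp = data[0].numpy()
        # Note: the key here is "data", while evaluate() sets the input as "input0";
        # calibration inputs are bound by name, so these probably need to match.
        yield {"data": data_temp}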
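The hard-coded `32` in the loop above only yields 512 calibration samples when the batch size happens to be 32. A minimal sketch of the same generator that counts samples from the actual batch shape instead. The names `calibration_batches`, `max_samples`, `input_name`, and `FakeBatch` are hypothetical, and the fake loader only stands in for the PyTorch `DataLoader`:

```python
class FakeBatch:
    """Stand-in for a torch tensor: only .shape and .numpy() are used here."""

    def __init__(self, n):
        self.shape = (n, 3, 256, 128)

    def numpy(self):
        return self


def calibration_batches(loader, input_name="data", max_samples=512):
    """Yield {input_name: batch} dicts until about max_samples samples are seen."""
    seen = 0
    for batch, _paths in loader:
        if seen >= max_samples:
            break
        array = batch.numpy()
        seen += array.shape[0]  # count real samples, whatever the batch size is
        yield {input_name: array}


# Stand-in loader: 40 batches of 16 images each, paired with dummy paths.
fake_loader = [(FakeBatch(16), ["img"] * 16) for _ in range(40)]
batches = list(calibration_batches(fake_loader, input_name="input0"))
print(len(batches), sum(b["input0"].shape[0] for b in batches))  # 32 512
```

Passing the input name as a parameter also makes it easier to keep the calibration key in sync with the name used in `set_input`.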

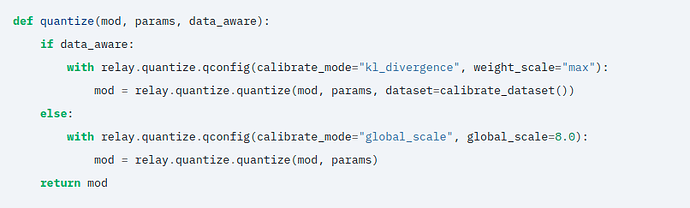

def quantize(mod, params, data_aware):
    if data_aware:
        # Data-aware: calibrate activation scales on a dataset using KL divergence.
        with relay.quantize.qconfig(calibrate_mode="kl_divergence", weight_scale="max"):
            mod = relay.quantize.quantize(mod, params, dataset=calibrate_dataset())
    else:
        # Data-free: use one fixed global scale for all activations.
        with relay.quantize.qconfig(calibrate_mode="global_scale", global_scale=8.0):
            mod = relay.quantize.quantize(mod, params)
    return mod

# evaluate code
def evaluate(lib, ctx, input_shape):
    module = runtime.GraphModule(lib["default"](ctx))
    # Random input is enough here because only the timing is measured.
    input_auto_schedule = tvm.nd.array((np.random.uniform(size=input_shape)).astype("float32"))
    module.set_input("input0", input_auto_schedule)
    # evaluate
    print("**Evaluate inference time cost...**")
    print(module.benchmark(ctx, number=2, repeat=2))
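For reading the summary that `module.benchmark` prints: `number` is how many runs are averaged into one measurement, and `repeat` is how many such measurements are taken, so `number=2, repeat=2` produces only two data points. A rough pure-Python sketch of that scheme (not TVM's implementation; `benchmark_sketch` and `warmup` are hypothetical names):

```python
import statistics
import time


def benchmark_sketch(fn, number=2, repeat=2, warmup=1):
    """Return `repeat` measurements in ms, each the mean over `number` calls."""
    for _ in range(warmup):
        fn()  # warm-up calls are not timed
    results_ms = []
    for _ in range(repeat):
        start = time.perf_counter()
        for _ in range(number):
            fn()
        elapsed = time.perf_counter() - start
        results_ms.append(elapsed / number * 1000.0)
    return results_ms


calls = []
times = benchmark_sketch(lambda: calls.append(1), number=2, repeat=2)
print("mean %.4f ms, std %.4f ms" % (statistics.mean(times), statistics.pstdev(times)))
```

With so few measurements the reported std says little, so larger `number` and `repeat` values may give a more trustworthy comparison.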

# main function
if args.use_quantize:
    mod = quantize(mod, params, data_aware=True)

with tvm.transform.PassContext(opt_level=3):
    lib = relay.build(mod, args.target, params=params)

# evaluate time
evaluate(lib, ctx, input_shape)

# unquantized result
# Execution time summary:
#   mean (ms)   median (ms)     max (ms)     min (ms)     std (ms)
#    172.6577      171.1886     175.1060     169.2712       0.3174

# quantized result
# Execution time summary:
#   mean (ms)   median (ms)     max (ms)     min (ms)     std (ms)
#  15192.1886    15192.1886   15298.1060   15086.2712     105.9174
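To put a number on "very slow", the two means above can be compared directly. This is plain arithmetic on the reported values, nothing TVM-specific:

```python
fp32_mean_ms = 172.6577    # unquantized mean from the summary above
int8_mean_ms = 15192.1886  # quantized mean from the summary above

slowdown = int8_mean_ms / fp32_mean_ms
print("quantized model is about %.0fx slower" % slowdown)  # about 88x
```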