Now that I’ve spent some time with TVM on Windows, I wanted to describe what I got working and how, in case it helps others. Maybe others can also tell me what I did wrong.

Compiling

This went pretty smoothly if you are already familiar with CMake. LLVM was a monster to build but went without a hitch. The CMake GUI worked out of the box; just don’t forget to set LLVM_DIR to the CMake config directory inside your LLVM build directory, for example: build\llvm\winx64\lib\cmake\llvm

If you use GraphRuntime, there is a small issue: using runtime/graph_runtime.h leads to linker errors. MSVC does not export symbols from a DLL by default. Setting the WINDOWS_EXPORT_ALL_SYMBOLS property on the tvm_runtime DLL target makes CMake export them for you, and that fixed my linker issues.

Python

First thing I did was set my PATH to include the TVM release directory that contained the compiled tvm dlls (build\tvm\winx64\Release).
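If you would rather not change PATH system-wide, the same thing can be done from inside the script before importing tvm. This is only a minimal sketch: the helper name `prepend_to_path` is made up for illustration, and the directory is the relative path from my build, so adjust it to wherever your tvm dlls actually are.

```python
import os


def prepend_to_path(directory, env=None):
    """Put `directory` at the front of PATH unless it is already listed."""
    env = os.environ if env is None else env
    entries = [e for e in env.get("PATH", "").split(os.pathsep) if e]
    if directory not in entries:
        entries.insert(0, directory)
    env["PATH"] = os.pathsep.join(entries)
    return env["PATH"]


# Assumption: the Release directory from my build; change it for your setup.
TVM_DLL_DIR = r"build\tvm\winx64\Release"
prepend_to_path(TVM_DLL_DIR)

# `import tvm` would follow here, after PATH has been updated.
```

Note that this matches Python 3.7, which is what I used. Python 3.8 and later changed how DLL dependencies are located on Windows, so `os.add_dll_directory` may be needed there instead.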

I have Python 3.7.x installed, and running “python setup.py install --user” as the directions describe went without a hitch.

Autotuning

This appears to be broken on Windows out of the box. You need a real Linux box if you plan on doing anything with CUDA without fuss. WSL seemed to work fine out of the box for CPU autotuning.

The RPC tracker and server would not work with INADDR_ANY (0.0.0.0); I had to specify the local computer’s IP address explicitly. I had to do this on Linux as well.
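Here is a rough sketch of how the explicit address could be looked up and passed along instead of 0.0.0.0. The helper names, the port 9190 (the one the TVM docs use in their examples) and the device key `my-gpu` are assumptions for illustration; the `tvm.exec.rpc_tracker` and `tvm.exec.rpc_server` modules and their `--host`, `--port`, `--tracker` and `--key` flags are the ones from the TVM docs.

```python
import socket


def local_ip(probe_addr=("10.255.255.255", 1)):
    """Best-effort guess at this machine's LAN IPv4 address."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # connect() on a UDP socket sends no packets; it only picks a route,
        # which tells us which local address would be used.
        sock.connect(probe_addr)
        return sock.getsockname()[0]
    except OSError:
        # No usable route (e.g. no network): fall back to loopback.
        return "127.0.0.1"
    finally:
        sock.close()


def tracker_command(host, port=9190):
    """Command line for the tracker, bound to an explicit address."""
    return ["python", "-m", "tvm.exec.rpc_tracker",
            f"--host={host}", f"--port={port}"]


def server_command(host, port=9190, key="my-gpu"):
    """Command line for a server that registers with that tracker."""
    return ["python", "-m", "tvm.exec.rpc_server",
            f"--host={host}", f"--tracker={host}:{port}", f"--key={key}"]


if __name__ == "__main__":
    ip = local_ip()
    print(" ".join(tracker_command(ip)))
    print(" ".join(server_command(ip)))
```

On a machine with several network adapters the guessed address may not be the one you want, in which case just type the address in by hand.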

I was able to get autotuning to work on Windows, but it took a lot of debugging and editing, mostly of things I was unfamiliar with in Python.

For instance, the thread.start() call here would deadlock: it never reached the next line (the thread.join) and never ran the thread entry point. I did verify that Python threads work by writing some test code, but that specific point deadlocked.

I literally went line by line, hacking up Python code until nvcc kicked in and it started working.

XGBoost would not work at all (some crash about a pickle), so I used gridsearch instead.

Compiling the model

Relay would get stuck in a tight infinite loop somewhere in thread_pool.cc (constantly hitting thread yields). Setting TVM_NUM_THREADS=1 always fixed this.

My workflow with Windows

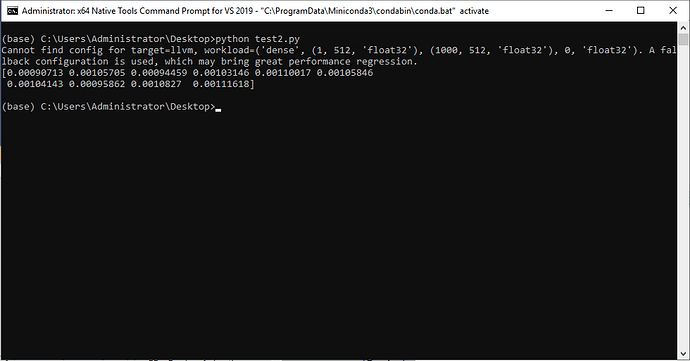

I honestly gave TVM on Windows a hard try, as I need to at least compile the modules for Windows and CUDA. The only reliable way I found is to autotune on a real Linux machine, where the TVM docs pretty much worked as prescribed. I then copy the tuned [network].log file to my Windows machine and set TVM_NUM_THREADS=1 (important!). Then I run the exact same script I used for the Linux autotune, but with the “tune_tasks” line commented out. That skips the problematic Windows issues and leaves me with an optimized model for Windows.
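The "same script on both machines" idea could be sketched roughly like this. It is not my exact script: `should_tune` and `compile_with_log` are hypothetical names, `tune_fn` stands in for the tutorial's tune_tasks call, and the `autotvm.apply_history_best`, `relay.build_config` and `relay.build_module.build` calls are the ones from the autotuning tutorial of the TVM version I assume here, so newer releases may spell them differently.

```python
import os
import platform

# Windows workaround: set this before tvm is imported so the runtime
# thread pool picks it up.
if platform.system() == "Windows":
    os.environ["TVM_NUM_THREADS"] = "1"


def should_tune(system=None):
    """Tune everywhere except Windows, where the log copied from Linux is reused."""
    system = platform.system() if system is None else system
    return system != "Windows"


def compile_with_log(mod, params, target, log_file, tune_fn=None):
    """Build a Relay module using the best configs recorded in `log_file`."""
    # Imported lazily so this file can be loaded on a machine without TVM.
    from tvm import autotvm, relay

    if tune_fn is not None and should_tune():
        # Hypothetical hook standing in for the tutorial's tune_tasks(...).
        tune_fn(log_file)

    # Apply the tuned configs from the log while compiling.
    with autotvm.apply_history_best(log_file):
        with relay.build_config(opt_level=3):
            return relay.build_module.build(mod, target=target, params=params)
```

The point of the platform check is that nothing has to be commented out by hand: on Linux the script tunes and writes the log, on Windows it only reads the copied log and compiles.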

If there is a better way, I’d really love to hear it. Hopefully this can help someone else.

Thank you for this project. It’s absolutely amazing!! So far I haven’t given it a model where I didn’t get at least a 3x perf increase!