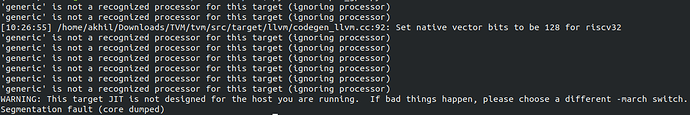

I am encountering some error when trying to use tvm to execute opencl code. The error is as follows.

and the code that generated this error

```python
import tvm
from tvm import te
import numpy as np

# Vector addition C = A + B over a symbolic length n
n = te.var("n")
A = te.placeholder((n,), name="A")
B = te.placeholder((n,), name="B")
C = te.compute(A.shape, lambda i: A[i] + B[i], name="C")

s = te.create_schedule(C.op)

# Split the loop into chunks of 64 and bind them to GPU block/thread indices
bx, tx = s[C].split(C.op.axis[0], factor=64)
s[C].bind(bx, te.thread_axis("blockIdx.x"))
s[C].bind(tx, te.thread_axis("threadIdx.x"))

# Compile the schedule for the OpenCL target
fadd_cl = tvm.build(s, [A, B, C], target="opencl", name="myadd")

# Use the first OpenCL device
ctx = tvm.opencl(0)

n = 1024
a = tvm.nd.array(np.random.uniform(size=n).astype(A.dtype), ctx)
b = tvm.nd.array(np.random.uniform(size=n).astype(B.dtype), ctx)
c = tvm.nd.array(np.zeros(n, dtype=C.dtype), ctx)
fadd_cl(a, b, c)
np.testing.assert_allclose(c.asnumpy(), a.asnumpy() + b.asnumpy())
```
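For context on what the schedule asks the device to do: `split` with `factor=64` followed by the two `bind` calls means each (block, thread) pair handles exactly one element, at index `bx * 64 + tx`. In OpenCL terms, `blockIdx.x` and `threadIdx.x` correspond to `get_group_id(0)` and `get_local_id(0)`. Below is a minimal serial NumPy sketch of that indexing, runnable without TVM or an OpenCL device. The function name `emulate_split_bind` is hypothetical, and this is only an illustration of the mapping, not the kernel TVM actually emits. Because `n` is symbolic, the tail has to be guarded when `n` is not a multiple of 64.

```python
import numpy as np


def emulate_split_bind(a, b, factor=64):
    """Serially emulate a split(factor) + bind(blockIdx.x, threadIdx.x) kernel.

    Hypothetical helper for illustration only: each (bx, tx) pair owns the
    single element i = bx * factor + tx.
    """
    n = a.shape[0]
    c = np.zeros_like(a)
    num_blocks = (n + factor - 1) // factor  # ceil(n / factor) blocks (work-groups)
    for bx in range(num_blocks):          # plays the role of blockIdx.x
        for tx in range(factor):          # plays the role of threadIdx.x
            i = bx * factor + tx
            if i < n:                     # tail guard when n % factor != 0
                c[i] = a[i] + b[i]
    return c, num_blocks


a = np.random.uniform(size=1000).astype("float32")
b = np.random.uniform(size=1000).astype("float32")
c, num_blocks = emulate_split_bind(a, b)
np.testing.assert_allclose(c, a + b)
print(num_blocks)  # 16 blocks of 64 cover 1000 elements
```

With `n = 1024`, as in the post, the split divides evenly into 16 blocks and the guard never triggers.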