I discussed this with @tqchen, @junrushao, and @mbs-octoml. tl;dr we are broadly in agreement with this RFC and we think it can proceed.

This post will start by re-summarizing our understanding of the motivations for such an invasive IR change. Then, it will cover the controversial parts and explain the various approaches. Finally, it will summarize our opinions and conclude with our opinion of the best way forward.

This thread was incredibly long. Now that the format of the TVM Community Meeting has changed, I’d suggest we bring further discussion of large design changes like this one to those meetings for higher-bandwidth discussions.

Motivations for this Change

This RFC proposes to overhaul the way the TVM compiler is configured. The motivation behind this is to export the compiler configuration into a human-readable format (e.g. YAML) that can be consumed by a command-line tool (e.g. tvmc).

Additionally, there is a desire to place the full target configuration in the IRModule somewhere as an attribute so that it can be used in various passes (@Mousius and @manupa-arm, would be great to re-clarify this).

Classes of Configuration Affected by this Proposal

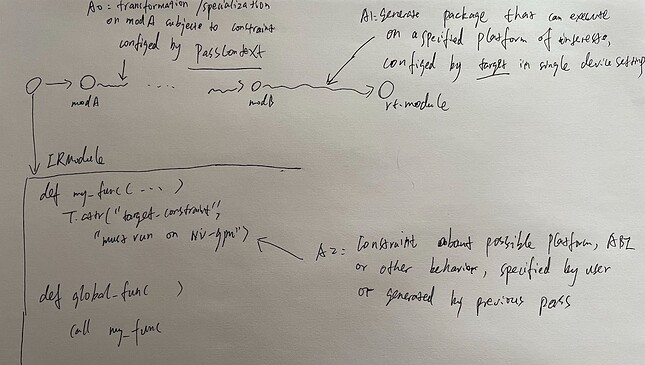

A discussion point that arose midway through this RFC is around the classification of configuration involved with this proposal. @tqchen proposed two classes:

C0. Configuration that directly specifies how some process in the compiler is carried out. It’s important to consider this in the abstract when understanding the motivations for the decisions here. In practice, in the codebase today, this roughly corresponds to PassContext.

C1. Configuration that specifies constraints on the compiler without giving a specific way to accommodate them. This configuration typically specifies properties of the deployment environment. As with C0, it’s important to consider this class in the abstract. In practice, in the codebase today, this roughly corresponds to Target.

Point of Clarification: this RFC is confined to C1-style config. A follow-on RFC may consider C0-style config.

What can be attached to an IRModule?

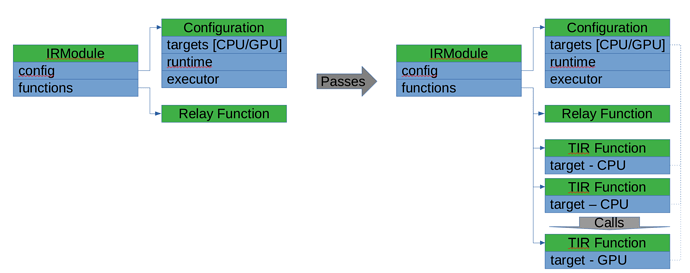

This RFC proposes that we attach the full CompilationConfig to an IRModule. Before the previous point was clarified, this was contentious. We discussed at length what style of configuration should be permitted to be attached to IRModules. The consensus was that C0-style config should not be attached to IRModules because it may create behavioral coupling between Passes, which could be difficult to unit test. There is a strong desire to avoid coupling between Passes to keep them composable and retain flexibility in the compiler.

The result of this discussion was a decision that CompilationConfig itself should not be attached to an IRModule; rather, the C1-style config it contains (namely, the Target information) should be attached instead.
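To make that decision concrete, here is a minimal sketch of attaching only the C1-style part of a config to a module. Everything in it is hypothetical: `Module` is a stand-in for IRModule, the `CompilationConfig` fields and the `opt_level` key are illustrative names, and none of this is TVM's actual API.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass(frozen=True)
class CompilationConfig:
    """Hypothetical container holding both classes of configuration."""

    target: Dict[str, Any]  # C1-style: constraints from the deployment environment
    pass_config: Dict[str, Any] = field(default_factory=dict)  # C0-style: how passes run


@dataclass
class Module:
    """Stand-in for an IRModule; only the attrs dict matters for this sketch."""

    attrs: Dict[str, Any] = field(default_factory=dict)


def attach_c1_config(mod: Module, config: CompilationConfig) -> Module:
    """Return a copy of `mod` with only the C1-style config attached."""
    new_attrs = dict(mod.attrs)
    # The C0-style pass_config is deliberately left out so that passes
    # cannot become coupled through module attributes.
    new_attrs["target"] = config.target
    return Module(attrs=new_attrs)


config = CompilationConfig(
    target={"kind": "llvm", "mcpu": "cortex-m33"},
    pass_config={"opt_level": 3},  # illustrative C0-style setting
)
mod = attach_c1_config(Module(), config)
```

The point of the sketch is only that a pass reading `mod.attrs` can see the Target but has no way to observe C0-style settings through the module.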

Why attach C1-style CompilationConfig to an IRModule?

There is one question unanswered in the previous section: what is the motivation for attaching C1-style CompilationConfig to IRModule? There are two points to make here:

- There was a need by ARM folks to reference the Target from some passes [@mousius @manupa-arm it has now been so long since we discussed this I have forgotten which one required this—feel free to add it in]. Target is an object currently passed around the compiler on the stack as necessary. Last year, @jroesch began an effort to attach all of this “extra” (e.g. stack-passed information, or information tracked in flow-level compiler classes) to the IRModule during compilation. Target is yet another instance of this, so attaching it to the IRModule is the medium-term correct way to expose it to the pass ARM is trying to write.

- The ultimate goal of this RFC is to expose the compiler’s configuration to tvmc users in a form that can be edited, serialized, and deserialized without needing to write Python or have a copy of the TVM source code. Since tvmc users have little visibility into the compiler source, it’s beneficial to eliminate any translations between the configuration they edit and the configuration accepted by the compiler. Attaching C1-style CompilationConfig (e.g. Target) directly to IRModule and referencing that as the authority on C1-style config accomplishes that goal.

Representation of Target

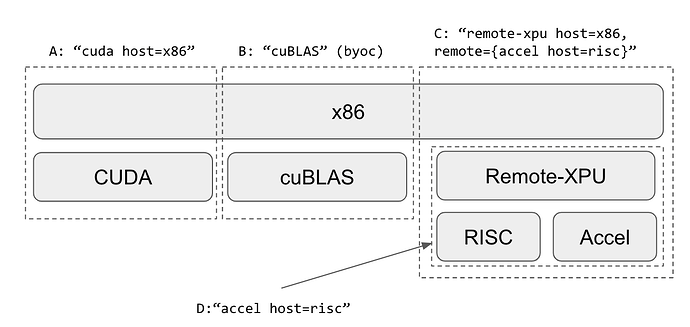

We now turn to the most contentious piece of debate: how should Target be represented? There are two types of Targets considered here:

- Leaf targets. Identifies a single TVM backend (mapping to a single DLDevice at runtime) which, when used with the broader CompilationConfig, will generate functions which depend only on that device for execution.

- Composite targets. Identifies a collection of Leaf Targets, one of which is considered the “host” (and therefore, which will host the Executor infrastructure).

Target is typically thought of as a parameter to tvm.relay.build. Currently, when a Leaf Target is passed to tvm.relay.build, it is promoted to a Composite Target with the “host” considered to be the same Leaf Target.
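The promotion described here can be sketched with plain dictionaries. This is an illustration under assumptions, not how TVM implements it: targets are modeled as dicts with a `kind` key, and `promote_to_composite` is a hypothetical helper name.

```python
from typing import Any, Dict


def promote_to_composite(target: Dict[str, Any]) -> Dict[str, Any]:
    """Give a leaf target a host by reusing the leaf itself.

    Assumption for this sketch: a target that already names a host is
    treated as composite and returned unchanged.
    """
    if "host" in target:
        return target
    leaf = dict(target)
    # The host is a copy of the same leaf, so the two stay independent.
    return {**leaf, "host": dict(leaf)}


llvm_only = promote_to_composite({"kind": "llvm"})
cuda_with_host = promote_to_composite({"kind": "cuda", "host": {"kind": "llvm"}})
```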

The contentious piece here was how to represent composite targets. Several options were proposed:

D0. Introduce “packaged” Target

This proposal suggests we introduce a new Target type:

    {
        "kind": "packaged",
        "runtime": "crt",
        "executor": "...",
        "target": {
            "kind": "cuda",  # the target that TIR generates to
            "host": {
                "kind": "llvm",  # the codegen target for the host-side driver code
                ...
            }
        },
    }

    def tvm.target.packaged(
        target="cuda",
        executor="aot",
        runtime="crt",
    ): ...

The advantages to this option were:

- It allows reuse of the Target schema infrastructure specified in src/target/target_kind.cc and friends.

- It requires minimal effort to implement.

- It is polymorphic—any attribute in an IRModule where a Target was required could be either a Leaf Target or a Composite Target. This means that where some flexibility was desired, the compiler could begin with a Composite Target and, via Passes, arrive at a Leaf Target. The example given here was in deciding where a Relay function should run.

- Common needs such as in-memory repr for efforts such as Collage are already implemented.

- No modification to tvm.relay.build is needed aside from adjustments to [Target.check_and_update_host_consist](https://github.com/apache/tvm/blob/main/python/tvm/target/target.py#L222).

The disadvantages to this option were:

- Polymorphism can lead to confusion. When an attribute exists on a part of an IRModule which could be either Leaf or Composite Target, passes need to add extra logic to determine which kind of target is present. Asserting that an IRModule is well-formed is more difficult and could be a more difficult process for the programmer to understand.

- It is presumed that tvmc-level configuration could be specified by more than one user. For example, a part of that configuration could be specified by the hardware vendor, and another part could be specified by the tvmc user. While it would be illegal for packaged Target to contain another packaged Target, such rules would need to be enforced by runtime logic rather than the type system. In a situation such as the one just posed, where multiple partial configurations exist and are combined to form a whole, it is vital that the user be able to understand the rules for combining partial configurations. Given the potential for infinite recursion allowed by the type system, those rules become difficult to specify.
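To illustrate what "enforced by runtime logic" would mean under D0, here is a rough sketch of such a check over the dictionary form shown above. The function names are hypothetical and the exact rules are assumptions made for the example; the thread does not define them.

```python
from typing import Any, Dict


def _check_no_nested_packaged(target: Dict[str, Any]) -> None:
    """Walk a target and its chain of hosts, rejecting any nested packaged target."""
    if target.get("kind") == "packaged":
        raise ValueError("a packaged target may not contain another packaged target")
    host = target.get("host")
    if isinstance(host, dict):
        _check_no_nested_packaged(host)


def check_packaged_target(config: Dict[str, Any]) -> None:
    """Validate the top-level shape assumed in this sketch."""
    if config.get("kind") != "packaged":
        raise ValueError("expected a packaged target at the top level")
    inner = config.get("target")
    if not isinstance(inner, dict):
        raise ValueError("a packaged target needs an inner 'target' entry")
    _check_no_nested_packaged(inner)


valid = {
    "kind": "packaged",
    "runtime": "crt",
    "executor": "aot",
    "target": {"kind": "cuda", "host": {"kind": "llvm"}},
}
check_packaged_target(valid)  # passes silently
```

Nothing in the dictionary type itself prevents the nested case; the check only fires when some code remembers to call it, which is the concern raised in the bullet above.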

D1. Adopt explicit LeafTarget and PackagedTarget classes

In this option, LeafTarget and PackagedTarget are represented by distinct classes which inherit from a common base class e.g. TargetBase. TargetBase is presumed to contain only infrastructure such as schema representation and in-memory repr functionality. It would not be considered to be a valid attribute type in the TVM compilation pass, with one exception: it would be valid for a single component to store TargetBase when:

- It is not attached as TargetBase to an IRModule seen from another Pass.

- It is convenient for that component to represent a flexible Leaf or Composite Target.

The proposal is sketched below:

    class TargetBase:
        kind: str

    class LeafTarget(TargetBase):
        kind: str
        host: Optional[LeafTarget]
        ...

    class VirtualDevice:
        target: Optional[LeafTarget]
        device_id: int

    class PackagedTarget(TargetBase):
        target: LeafTarget
        host: LeafTarget
        executor: Executor
        runtime: Runtime
        devices: List[VirtualDevice]

The advantages to this option are:

- It allows reuse of the Target schema infrastructure specified in src/target/target_kind.cc and friends.

- It requires minimal effort to implement.

- It is explicit—there is no confusion between PackagedTarget and LeafTarget where attached to an IRModule.

- Common needs such as in-memory repr for efforts such as Collage are already implemented.

- No modification to tvm.relay.build is needed aside from adjustments to [Target.check_and_update_host_consist](https://github.com/apache/tvm/blob/main/python/tvm/target/target.py#L222). However, we could modify tvm.relay.build to take PackagedTarget only in a future update.

The disadvantages to this option are:

- The kind field is present on the base class and could suggest polymorphic use in the code.

- Polymorphic use needs to be disallowed in code review.
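For readers who want to poke at D1, the class sketch above can be approximated with dataclasses. This is only a rough model under assumptions: `executor` and `runtime` are plain strings standing in for the Executor and Runtime types, and `attach_to_module` is a hypothetical helper, not a TVM function.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class TargetBase:
    """Shared infrastructure only; not meant to appear on an IRModule."""

    kind: str


@dataclass
class LeafTarget(TargetBase):
    host: Optional["LeafTarget"] = None


@dataclass
class VirtualDevice:
    target: Optional[LeafTarget]
    device_id: int


@dataclass
class PackagedTarget(TargetBase):
    target: LeafTarget
    host: LeafTarget
    executor: str  # stand-in for an Executor object
    runtime: str  # stand-in for a Runtime object
    devices: List[VirtualDevice] = field(default_factory=list)


def attach_to_module(attrs: Dict[str, Any], target: PackagedTarget) -> Dict[str, Any]:
    """Attach a target to module attrs, accepting only the explicit packaged type."""
    if not isinstance(target, PackagedTarget):
        raise TypeError("only PackagedTarget may be attached in this sketch")
    return {**attrs, "target": target}


cpu = LeafTarget(kind="llvm")
gpu = LeafTarget(kind="cuda", host=cpu)
packaged = PackagedTarget(
    kind="packaged",
    target=gpu,
    host=cpu,
    executor="aot",
    runtime="crt",
    devices=[VirtualDevice(target=gpu, device_id=0)],
)
module_attrs = attach_to_module({}, packaged)
```

The `isinstance` check is where the "explicit" property shows up: a LeafTarget passed by mistake is rejected at the attachment point rather than being interpreted polymorphically by later passes.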

D2. Adopt separate PackagedTarget and LeafTargets without any common base class

This option fully separates the PackagedTarget and LeafTarget classes:

    class LeafTarget:
        host: Optional[LeafTarget]

    Target = LeafTarget

    class VirtualDevice:
        target: Optional[LeafTarget]
        device_id: int

    class PackageConfig:
        host: LeafTarget
        executor: Executor
        runtime: Runtime
        devices: List[VirtualDevice]

The advantages to this option are:

- It is explicit—there is no confusion between PackagedTarget and LeafTarget where attached to an IRModule.

- The API to tvm.relay.build could be made the most specific of all of the options.

The disadvantages to this option are:

- Target schema and repr infrastructure needs to be re-implemented.

- It requires a big lift that may be difficult/impossible to do in an incremental way.

Decision on Target Representation

We conclude that D1 is the best approach. It has the benefits of explicit typing on IRModule and in flow-level compiler classes while retaining flexibility which could prove useful in implementing future projects which may experiment with composite targets, such as Collage. Collage will discuss these efforts shortly at the TVM Community Meeting and in an RFC.

Example of Partial Configuration

Finally, an example of partial configuration, as it had bearing on the discussion:

my-soc.yaml:

    tag: my-soc-base
    target:
      kind: ethos
      memory-size: 128
    host:
      kind: llvm
      mcpu: cortex-m33
    runtime:
      kind: c

app.yaml:

    executor:
      kind: aot
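One possible way to combine the two partial configurations is sketched below, with the YAML contents written out as Python dictionaries so the example has no dependencies. The merge rule used here (nested keys are merged, and a key set to different values in both files is an error) is purely an assumption for illustration; the thread does not settle the combination rules, and `merge_partial` is a hypothetical name.

```python
from typing import Any, Dict


def merge_partial(base: Dict[str, Any], extra: Dict[str, Any]) -> Dict[str, Any]:
    """Merge two partial configs, refusing to silently override a value."""
    merged = dict(base)
    for key, value in extra.items():
        if key not in merged:
            merged[key] = value
        elif isinstance(merged[key], dict) and isinstance(value, dict):
            merged[key] = merge_partial(merged[key], value)
        elif merged[key] != value:
            raise ValueError(f"conflicting values for {key!r}")
    return merged


# Contents of my-soc.yaml (e.g. supplied by the hardware vendor).
my_soc = {
    "tag": "my-soc-base",
    "target": {"kind": "ethos", "memory-size": 128},
    "host": {"kind": "llvm", "mcpu": "cortex-m33"},
    "runtime": {"kind": "c"},
}

# Contents of app.yaml (e.g. supplied by the tvmc user).
app = {"executor": {"kind": "aot"}}

full_config = merge_partial(my_soc, app)
```

Treating conflicts as errors keeps the rules easy to explain to a user holding only one of the two files, though other policies (such as "the application file wins") would be equally easy to write.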

Our Conclusion

The RFC as proposed should not be in conflict with the consensus we reached. We prefer the implementation of the RFC to re-use the schema and in-memory repr infrastructure developed for Target by adopting a common base class. Only the PackagedTarget from CompilationConfig should be attached to the IRModule, leaving room to add PassContext to CompilationConfig in a future RFC.