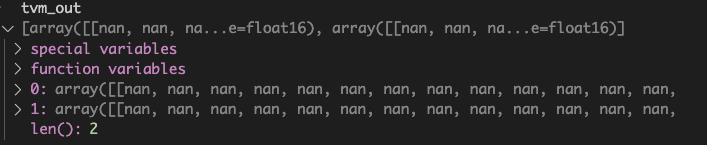

Hi, I noticed that the mixed precision PR mentions that BERT is tested and verified. However, when I applied the ToMixedPrecision pass to my fp32 mod, the inference result I get from fp16_mod is full of NaNs. Has anyone else run into this problem?

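    from tvm.relay.transform import InferType, ToMixedPrecision

    if dtype == "float16":
        # Run type inference first, then convert the fp32 module to mixed precision
        mod = InferType()(mod)
        fp16_mod = ToMixedPrecision(dtype)(mod)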
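For reference, here is a minimal sketch of how the outputs could be checked, assuming both the fp32 and fp16 results have already been copied out as NumPy arrays. The helper names `summarize_output` and `overflows_fp16` are hypothetical (not part of TVM), and the check only covers one possible cause: fp32 values that fall outside the float16 range.

```python
import numpy as np

# Largest finite value representable in float16 (65504.0)
FP16_MAX = float(np.finfo(np.float16).max)


def summarize_output(name, arr):
    """Report NaN/Inf counts and the largest finite magnitude in an output array.

    Hypothetical helper for illustration; not a TVM API.
    """
    arr = np.asarray(arr)
    finite = np.isfinite(arr)
    return {
        "name": name,
        "nan_count": int(np.isnan(arr).sum()),
        "inf_count": int(np.isinf(arr).sum()),
        "max_abs_finite": float(np.abs(arr[finite]).max()) if finite.any() else None,
    }


def overflows_fp16(fp32_arr):
    """Return True if any finite fp32 value lies outside the float16 range.

    Hypothetical helper for illustration; not a TVM API.
    """
    arr = np.asarray(fp32_arr, dtype=np.float32)
    finite = np.isfinite(arr)
    return bool((np.abs(arr[finite]) > FP16_MAX).any())
```

Running `summarize_output` on the fp16 result shows how many entries are NaN or Inf, and running `overflows_fp16` on the fp32 result gives a hint as to whether the values are simply too large for float16.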