Thanks for your reply.

With n_trial = 100, the error still occurs.

With n_trial = len(task.config_space), some new debug information was printed.

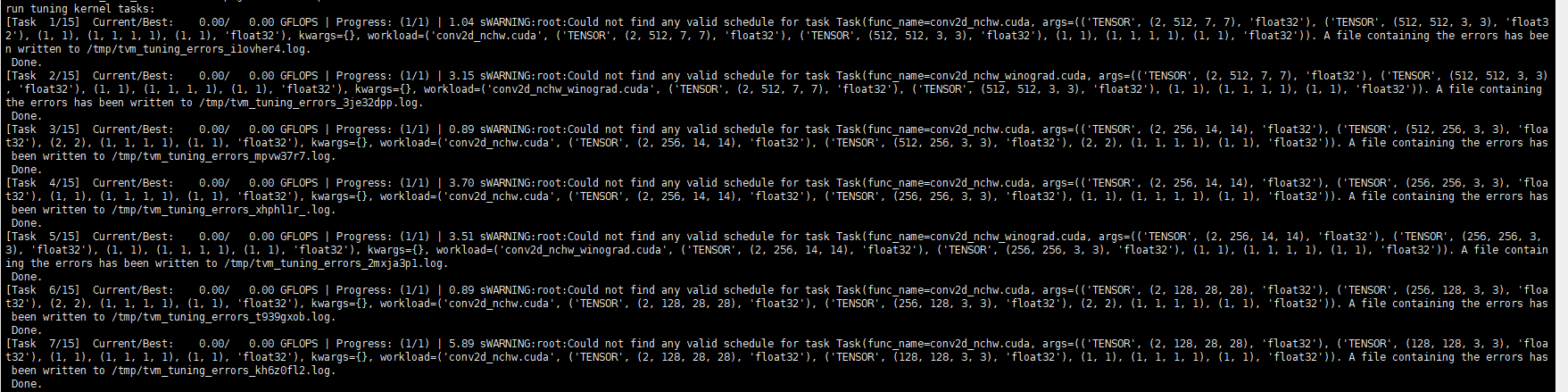

Part of the debug info:

[Task 1 / 15] Current / Best: 0.00 / 0.00 GFLOPS | Progress: (160 / 844800) | 186.98 s

WARNING: autotvm: Too many errors happen in the tuning. Now is in debug mode
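For reference, the same debug output can be turned on from the start of a run instead of waiting for the tuner to switch modes by itself. This is only a minimal sketch, assuming the logger is named autotvm (as the log prefix above suggests) and that printing to stdout is acceptable:

```python
import logging
import sys

# Assumption: autotvm logs through the standard-library logger named "autotvm",
# matching the "DEBUG: autotvm:" prefix seen in the output.
logger = logging.getLogger("autotvm")
logger.setLevel(logging.DEBUG)

# Send the records to stdout so they appear next to the progress bar.
logger.addHandler(logging.StreamHandler(sys.stdout))
```

This has to run before tuner.tune(...) is called so that the first failing trials are already logged.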

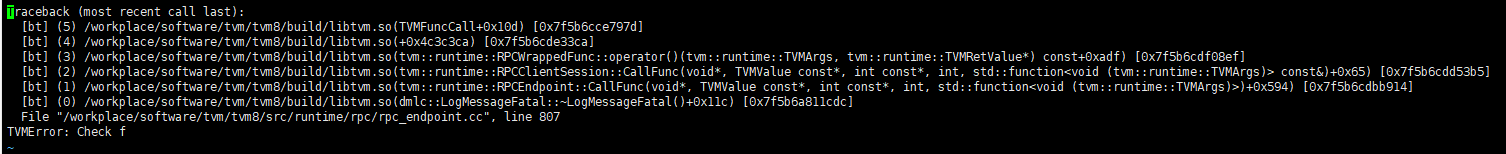

DEBUG: autotvm: No: 161 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (RuntimeError('Traceback (most recent call last):\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4c3c3ca) [0x7fe55a05a3ca]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCWrappedFunc::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const+0xadf) [0x7fe55a0678ef]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCClientSession::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)> const&)+0x65) [0x7fe55a04c3b5]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCEndpoint::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)>)+0x594) [0x7fe55a032914]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(dmlc::LogMessageFatal::~LogMessageFatal()+0x11c) [0x7fe557a88cdc]\n File "/workplace/software/tvm/tvm8/src/runtime/rpc/rpc_endpoint.cc", line 807\nTVMError: Check f'), ), error_no = 4, all_cost = 2.359102964401245, timestamp = 1620783122.138785)[('tile_f', [-1, 2, 1, 16]), ('tile_y', [-1, 7, 1, 1]), ('tile_x', [-1, 1, 7, 1]), ('tile_rc', [-1, 2]), ('tile_ry', [-1, 1]), ('tile_rx', [-1, 3]), ('auto_unroll_max_step', 1500), ('unroll_explicit', 0)], None, 357665

DEBUG: autotvm: No: 162 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (InstantiationError('Traceback (most recent call last):\n [bt] (8) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (7) /workplace/software/tvm/tvm8/build/libtvm.so(+0x2d3e246) [0x7fe55815c246]\n [bt] (6) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule) const+0x217) [0x7fe557e81bb7]\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::PassNode::operator()(tvm::IRModule) const+0xa0) [0x7fe557e9b460]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::SequentialNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x913) [0x7fe5581569a3]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x223) [0x7fe558166ca3]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::tir::transform::PrimFuncPassNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x90a) [0x7fe55889daba]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::TVMRetValue tvm::runtime::PackedFunc::operator()<tvm::tir::PrimFunc, tvm::IRModule, tvm::transform::PassContext>(tvm::tir::PrimFunc&&, tvm::IRModule&&, tvm::transform::PassContext&&) const+0x177) [0x7fe5588a48a7]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4b42e6b) [0x7fe559f60e6b]\n File "tvm/_ffi/_cython/./packed_func.pxi", line 55, in tvm._ffi._cy3.core.tvm_callback\n File "/workplace/software/tvm/tvm8/python/tvm/autotvm/measure/measure_methods.py", line 747, in verify_pass\n raise InstantiationError("Skipped because of invalid gpu kernel")\ntvm.autotvm.task.space.InstantiationError: Skipped because of invalid gpu kernel'), ), error_no = 1, all_cost = 0.059586286544799805, timestamp = 1620783111.542556)[('tile_f', [-1, 4, 2, 8]), ('tile_y', [-1, 1, 7, 1]), ('tile_x', [-1, 1, 1, 1]), 
('tile_rc', [-1, 128]), ('tile_ry', [-1, 1]), ('tile_rx', [-1, 3]), ('auto_unroll_max_step', 1500), ('unroll_explicit', 0)], None, 377225

DEBUG: autotvm: No: 163 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (RuntimeError('Traceback (most recent call last):\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4c3c3ca) [0x7fe55a05a3ca]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCWrappedFunc::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const+0xadf) [0x7fe55a0678ef]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCClientSession::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)> const&)+0x65) [0x7fe55a04c3b5]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCEndpoint::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)>)+0x594) [0x7fe55a032914]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(dmlc::LogMessageFatal::~LogMessageFatal()+0x11c) [0x7fe557a88cdc]\n File "/workplace/software/tvm/tvm8/src/runtime/rpc/rpc_endpoint.cc", line 807\nTVMError: Check f'), ), error_no = 4, all_cost = 2.060210943222046, timestamp = 1620783123.3094442)[('tile_f', [-1, 4, 16, 8]), ('tile_y', [-1, 1, 1, 1]), ('tile_x', [-1, 1, 1, 1]), ('tile_rc', [-1, 2]), ('tile_ry', [-1, 1]), ('tile_rx', [-1, 3]), ('auto_unroll_max_step', 512), ('unroll_explicit', 0)], None, 214880

DEBUG: autotvm: No: 164 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (RuntimeError('Traceback (most recent call last):\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4c3c3ca) [0x7fe55a05a3ca]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCWrappedFunc::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const+0xadf) [0x7fe55a0678ef]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCClientSession::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)> const&)+0x65) [0x7fe55a04c3b5]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCEndpoint::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)>)+0x594) [0x7fe55a032914]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(dmlc::LogMessageFatal::~LogMessageFatal()+0x11c) [0x7fe557a88cdc]\n File "/workplace/software/tvm/tvm8/src/runtime/rpc/rpc_endpoint.cc", line 807\nTVMError: Check f'), ), error_no = 4, all_cost = 1.7798535823822021, timestamp = 1620783124.7946866)[('tile_f', [-1, 1, 8, 16]), ('tile_y', [-1, 1, 1, 1]), ('tile_x', [-1, 1, 1, 7]), ('tile_rc', [-1, 1]), ('tile_ry', [-1, 3]), ('tile_rx', [-1, 3]), ('auto_unroll_max_step', 0), ('unroll_explicit', 0)], None, 108419

DEBUG: autotvm: No: 165 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (RuntimeError('Traceback (most recent call last):\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4c3c3ca) [0x7fe55a05a3ca]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCWrappedFunc::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const+0xadf) [0x7fe55a0678ef]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCClientSession::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)> const&)+0x65) [0x7fe55a04c3b5]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCEndpoint::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)>)+0x594) [0x7fe55a032914]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(dmlc::LogMessageFatal::~LogMessageFatal()+0x11c) [0x7fe557a88cdc]\n File "/workplace/software/tvm/tvm8/src/runtime/rpc/rpc_endpoint.cc", line 807\nTVMError: Check f'), ), error_no = 4, all_cost = 3.2494418621063232, timestamp = 1620783126.4023278)[('tile_f', [-1, 8, 4, 1]), ('tile_y', [-1, 7, 1, 1]), ('tile_x', [-1, 7, 1, 1]), ('tile_rc', [-1, 64]), ('tile_ry', [-1, 3]), ('tile_rx', [-1, 1]), ('auto_unroll_max_step', 512), ('unroll_explicit', 1)], None, 620642

DEBUG: autotvm: No: 166 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (InstantiationError('Traceback (most recent call last):\n [bt] (8) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (7) /workplace/software/tvm/tvm8/build/libtvm.so(+0x2d3e246) [0x7fe55815c246]\n [bt] (6) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule) const+0x217) [0x7fe557e81bb7]\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::PassNode::operator()(tvm::IRModule) const+0xa0) [0x7fe557e9b460]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::SequentialNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x913) [0x7fe5581569a3]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x223) [0x7fe558166ca3]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::tir::transform::PrimFuncPassNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x90a) [0x7fe55889daba]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::TVMRetValue tvm::runtime::PackedFunc::operator()<tvm::tir::PrimFunc, tvm::IRModule, tvm::transform::PassContext>(tvm::tir::PrimFunc&&, tvm::IRModule&&, tvm::transform::PassContext&&) const+0x177) [0x7fe5588a48a7]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4b42e6b) [0x7fe559f60e6b]\n File "tvm/_ffi/_cython/./packed_func.pxi", line 55, in tvm._ffi._cy3.core.tvm_callback\n File "/workplace/software/tvm/tvm8/python/tvm/autotvm/measure/measure_methods.py", line 747, in verify_pass\n raise InstantiationError("Skipped because of invalid gpu kernel")\ntvm.autotvm.task.space.InstantiationError: Skipped because of invalid gpu kernel'), ), error_no = 1, all_cost = 0.06744527816772461, timestamp = 1620783112.4252615)[('tile_f', [-1, 1, 16, 32]), ('tile_y', [-1, 1, 1, 7]), ('tile_x', [-1, 1, 1, 1]), 
('tile_rc', [-1, 64]), ('tile_ry', [-1, 3]), ('tile_rx', [-1, 3]), ('auto_unroll_max_step', 512), ('unroll_explicit', 1)], None, 690779

DEBUG: autotvm: No: 167 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (InstantiationError('Traceback (most recent call last):\n [bt] (8) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (7) /workplace/software/tvm/tvm8/build/libtvm.so(+0x2d3e246) [0x7fe55815c246]\n [bt] (6) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule) const+0x217) [0x7fe557e81bb7]\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::PassNode::operator()(tvm::IRModule) const+0xa0) [0x7fe557e9b460]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::SequentialNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x913) [0x7fe5581569a3]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x223) [0x7fe558166ca3]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::tir::transform::PrimFuncPassNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x90a) [0x7fe55889daba]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::TVMRetValue tvm::runtime::PackedFunc::operator()<tvm::tir::PrimFunc, tvm::IRModule, tvm::transform::PassContext>(tvm::tir::PrimFunc&&, tvm::IRModule&&, tvm::transform::PassContext&&) const+0x177) [0x7fe5588a48a7]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4b42e6b) [0x7fe559f60e6b]\n File "tvm/_ffi/_cython/./packed_func.pxi", line 55, in tvm._ffi._cy3.core.tvm_callback\n File "/workplace/software/tvm/tvm8/python/tvm/autotvm/measure/measure_methods.py", line 747, in verify_pass\n raise InstantiationError("Skipped because of invalid gpu kernel")\ntvm.autotvm.task.space.InstantiationError: Skipped because of invalid gpu kernel'), ), error_no = 1, all_cost = 0.0637214183807373, timestamp = 1620783112.4254885)[('tile_f', [-1, 32, 4, 1]), ('tile_y', [-1, 1, 7, 1]), ('tile_x', [-1, 7, 1, 1]), 
('tile_rc', [-1, 256]), ('tile_ry', [-1, 1]), ('tile_rx', [-1, 3]), ('auto_unroll_max_step', 0), ('unroll_explicit', 0)], None, 99904

DEBUG: autotvm: No: 168 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (InstantiationError('Traceback (most recent call last):\n [bt] (8) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (7) /workplace/software/tvm/tvm8/build/libtvm.so(+0x2d3e246) [0x7fe55815c246]\n [bt] (6) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule) const+0x217) [0x7fe557e81bb7]\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::PassNode::operator()(tvm::IRModule) const+0xa0) [0x7fe557e9b460]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::SequentialNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x913) [0x7fe5581569a3]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x223) [0x7fe558166ca3]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::tir::transform::PrimFuncPassNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x90a) [0x7fe55889daba]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::TVMRetValue tvm::runtime::PackedFunc::operator()<tvm::tir::PrimFunc, tvm::IRModule, tvm::transform::PassContext>(tvm::tir::PrimFunc&&, tvm::IRModule&&, tvm::transform::PassContext&&) const+0x177) [0x7fe5588a48a7]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4b42e6b) [0x7fe559f60e6b]\n File "tvm/_ffi/_cython/./packed_func.pxi", line 55, in tvm._ffi._cy3.core.tvm_callback\n File "/workplace/software/tvm/tvm8/python/tvm/autotvm/measure/measure_methods.py", line 747, in verify_pass\n raise InstantiationError("Skipped because of invalid gpu kernel")\ntvm.autotvm.task.space.InstantiationError: Skipped because of invalid gpu kernel'), ), error_no = 1, all_cost = 0.0635826587677002, timestamp = 1620783112.4256418)[('tile_f', [-1, 8, 2, 16]), ('tile_y', [-1, 1, 7, 1]), ('tile_x', [-1, 1, 1, 7]), 
('tile_rc', [-1, 256]), ('tile_ry', [-1, 3]), ('tile_rx', [-1, 3]), ('auto_unroll_max_step', 512), ('unroll_explicit', 1)], None, 700213

DEBUG: autotvm: No: 169 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (InstantiationError('Traceback (most recent call last):\n [bt] (8) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (7) /workplace/software/tvm/tvm8/build/libtvm.so(+0x2d3e246) [0x7fe55815c246]\n [bt] (6) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule) const+0x217) [0x7fe557e81bb7]\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::PassNode::operator()(tvm::IRModule) const+0xa0) [0x7fe557e9b460]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::SequentialNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x913) [0x7fe5581569a3]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x223) [0x7fe558166ca3]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::tir::transform::PrimFuncPassNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x90a) [0x7fe55889daba]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::TVMRetValue tvm::runtime::PackedFunc::operator()<tvm::tir::PrimFunc, tvm::IRModule, tvm::transform::PassContext>(tvm::tir::PrimFunc&&, tvm::IRModule&&, tvm::transform::PassContext&&) const+0x177) [0x7fe5588a48a7]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4b42e6b) [0x7fe559f60e6b]\n File "tvm/_ffi/_cython/./packed_func.pxi", line 55, in tvm._ffi._cy3.core.tvm_callback\n File "/workplace/software/tvm/tvm8/python/tvm/autotvm/measure/measure_methods.py", line 747, in verify_pass\n raise InstantiationError("Skipped because of invalid gpu kernel")\ntvm.autotvm.task.space.InstantiationError: Skipped because of invalid gpu kernel'), ), error_no = 1, all_cost = 0.08813166618347168, timestamp = 1620783112.4258099)[('tile_f', [-1, 32, 4, 1]), ('tile_y', [-1, 1, 1, 1]), ('tile_x', [-1, 7, 1, 1]), 
('tile_rc', [-1, 256]), ('tile_ry', [-1, 1]), ('tile_rx', [-1, 3]), ('auto_unroll_max_step', 512), ('unroll_explicit', 1)], None, 662664

DEBUG: autotvm: No: 170 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (RuntimeError('Traceback (most recent call last):\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4c3c3ca) [0x7fe55a05a3ca]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCWrappedFunc::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const+0xadf) [0x7fe55a0678ef]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCClientSession::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)> const&)+0x65) [0x7fe55a04c3b5]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCEndpoint::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)>)+0x594) [0x7fe55a032914]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(dmlc::LogMessageFatal::~LogMessageFatal()+0x11c) [0x7fe557a88cdc]\n File "/workplace/software/tvm/tvm8/src/runtime/rpc/rpc_endpoint.cc", line 807\nTVMError: Check f'), ), error_no = 4, all_cost = 1.749293327331543, timestamp = 1620783127.9215028)[('tile_f', [-1, 2, 2, 4]), ('tile_y', [-1, 1, 1, 1]), ('tile_x', [-1, 1, 1, 1]), ('tile_rc', [-1, 128]), ('tile_ry', [-1, 3]), ('tile_rx', [-1, 1]), ('auto_unroll_max_step', 0), ('unroll_explicit', 1)], None, 482349

DEBUG: autotvm: No: 171 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (InstantiationError('Traceback (most recent call last):\n [bt] (8) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (7) /workplace/software/tvm/tvm8/build/libtvm.so(+0x2d3e246) [0x7fe55815c246]\n [bt] (6) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule) const+0x217) [0x7fe557e81bb7]\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::PassNode::operator()(tvm::IRModule) const+0xa0) [0x7fe557e9b460]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::SequentialNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x913) [0x7fe5581569a3]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x223) [0x7fe558166ca3]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::tir::transform::PrimFuncPassNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x90a) [0x7fe55889daba]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::TVMRetValue tvm::runtime::PackedFunc::operator()<tvm::tir::PrimFunc, tvm::IRModule, tvm::transform::PassContext>(tvm::tir::PrimFunc&&, tvm::IRModule&&, tvm::transform::PassContext&&) const+0x177) [0x7fe5588a48a7]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4b42e6b) [0x7fe559f60e6b]\n File "tvm/_ffi/_cython/./packed_func.pxi", line 55, in tvm._ffi._cy3.core.tvm_callback\n File "/workplace/software/tvm/tvm8/python/tvm/autotvm/measure/measure_methods.py", line 747, in verify_pass\n raise InstantiationError("Skipped because of invalid gpu kernel")\ntvm.autotvm.task.space.InstantiationError: Skipped because of invalid gpu kernel'), ), error_no = 1, all_cost = 0.05807065963745117, timestamp = 1620783112.4261537)[('tile_f', [-1, 8, 8, 8]), ('tile_y', [-1, 1, 1, 1]), ('tile_x', [-1, 1, 7, 1]), 
('tile_rc', [-1, 32]), ('tile_ry', [-1, 1]), ('tile_rx', [-1, 3]), ('auto_unroll_max_step', 0), ('unroll_explicit', 0)], None, 89917

DEBUG: autotvm: No: 172 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (InstantiationError('Traceback (most recent call last):\n [bt] (8) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (7) /workplace/software/tvm/tvm8/build/libtvm.so(+0x2d3e246) [0x7fe55815c246]\n [bt] (6) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule) const+0x217) [0x7fe557e81bb7]\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::PassNode::operator()(tvm::IRModule) const+0xa0) [0x7fe557e9b460]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::SequentialNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x913) [0x7fe5581569a3]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x223) [0x7fe558166ca3]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::tir::transform::PrimFuncPassNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x90a) [0x7fe55889daba]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::TVMRetValue tvm::runtime::PackedFunc::operator()<tvm::tir::PrimFunc, tvm::IRModule, tvm::transform::PassContext>(tvm::tir::PrimFunc&&, tvm::IRModule&&, tvm::transform::PassContext&&) const+0x177) [0x7fe5588a48a7]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4b42e6b) [0x7fe559f60e6b]\n File "tvm/_ffi/_cython/./packed_func.pxi", line 55, in tvm._ffi._cy3.core.tvm_callback\n File "/workplace/software/tvm/tvm8/python/tvm/autotvm/measure/measure_methods.py", line 747, in verify_pass\n raise InstantiationError("Skipped because of invalid gpu kernel")\ntvm.autotvm.task.space.InstantiationError: Skipped because of invalid gpu kernel'), ), error_no = 1, all_cost = 0.15351152420043945, timestamp = 1620783112.4263077)[('tile_f', [-1, 64, 1, 4]), ('tile_y', [-1, 7, 1, 1]), ('tile_x', [-1, 1, 1, 7]), 
('tile_rc', [-1, 16]), ('tile_ry', [-1, 1]), ('tile_rx', [-1, 3]), ('auto_unroll_max_step', 1500), ('unroll_explicit', 1)], None, 791446

DEBUG: autotvm: No: 173 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (RuntimeError('Traceback (most recent call last):\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4c3c3ca) [0x7fe55a05a3ca]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCWrappedFunc::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const+0xadf) [0x7fe55a0678ef]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCClientSession::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)> const&)+0x65) [0x7fe55a04c3b5]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCEndpoint::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)>)+0x594) [0x7fe55a032914]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(dmlc::LogMessageFatal::~LogMessageFatal()+0x11c) [0x7fe557a88cdc]\n File "/workplace/software/tvm/tvm8/src/runtime/rpc/rpc_endpoint.cc", line 807\nTVMError: Check f'), ), error_no = 4, all_cost = 1.7525591850280762, timestamp = 1620783129.3650928)[('tile_f', [-1, 2, 32, 1]), ('tile_y', [-1, 1, 1, 7]), ('tile_x', [-1, 1, 1, 7]), ('tile_rc', [-1, 1]), ('tile_ry', [-1, 1]), ('tile_rx', [-1, 1]), ('auto_unroll_max_step', 0), ('unroll_explicit', 0)], None, 3341

DEBUG: autotvm: No: 174 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (InstantiationError('Traceback (most recent call last):\n [bt] (8) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (7) /workplace/software/tvm/tvm8/build/libtvm.so(+0x2d3e246) [0x7fe55815c246]\n [bt] (6) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule) const+0x217) [0x7fe557e81bb7]\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::PassNode::operator()(tvm::IRModule) const+0xa0) [0x7fe557e9b460]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::SequentialNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x913) [0x7fe5581569a3]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x223) [0x7fe558166ca3]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::tir::transform::PrimFuncPassNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x90a) [0x7fe55889daba]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::TVMRetValue tvm::runtime::PackedFunc::operator()<tvm::tir::PrimFunc, tvm::IRModule, tvm::transform::PassContext>(tvm::tir::PrimFunc&&, tvm::IRModule&&, tvm::transform::PassContext&&) const+0x177) [0x7fe5588a48a7]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4b42e6b) [0x7fe559f60e6b]\n File "tvm/_ffi/_cython/./packed_func.pxi", line 55, in tvm._ffi._cy3.core.tvm_callback\n File "/workplace/software/tvm/tvm8/python/tvm/autotvm/measure/measure_methods.py", line 747, in verify_pass\n raise InstantiationError("Skipped because of invalid gpu kernel")\ntvm.autotvm.task.space.InstantiationError: Skipped because of invalid gpu kernel'), ), error_no = 1, all_cost = 0.07019519805908203, timestamp = 1620783112.4266315)[('tile_f', [-1, 1, 1, 256]), ('tile_y', [-1, 1, 1, 1]), ('tile_x', [-1, 7, 1, 1]), 
('tile_rc', [-1, 512]), ('tile_ry', [-1, 3]), ('tile_rx', [-1, 3]), ('auto_unroll_max_step', 512), ('unroll_explicit', 1)], None, 701576

DEBUG: autotvm: No: 175 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (InstantiationError('Traceback (most recent call last):\n [bt] (8) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (7) /workplace/software/tvm/tvm8/build/libtvm.so(+0x2d3e246) [0x7fe55815c246]\n [bt] (6) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule) const+0x217) [0x7fe557e81bb7]\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::PassNode::operator()(tvm::IRModule) const+0xa0) [0x7fe557e9b460]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::SequentialNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x913) [0x7fe5581569a3]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x223) [0x7fe558166ca3]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::tir::transform::PrimFuncPassNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x90a) [0x7fe55889daba]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::TVMRetValue tvm::runtime::PackedFunc::operator()<tvm::tir::PrimFunc, tvm::IRModule, tvm::transform::PassContext>(tvm::tir::PrimFunc&&, tvm::IRModule&&, tvm::transform::PassContext&&) const+0x177) [0x7fe5588a48a7]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4b42e6b) [0x7fe559f60e6b]\n File "tvm/_ffi/_cython/./packed_func.pxi", line 55, in tvm._ffi._cy3.core.tvm_callback\n File "/workplace/software/tvm/tvm8/python/tvm/autotvm/measure/measure_methods.py", line 747, in verify_pass\n raise InstantiationError("Skipped because of invalid gpu kernel")\ntvm.autotvm.task.space.InstantiationError: Skipped because of invalid gpu kernel'), ), error_no = 1, all_cost = 0.05889439582824707, timestamp = 1620783112.4268005)[('tile_f', [-1, 16, 2, 16]), ('tile_y', [-1, 1, 7, 1]), ('tile_x', [-1, 7, 1, 1]), 
('tile_rc', [-1, 512]), ('tile_ry', [-1, 3]), ('tile_rx', [-1, 1]), ('auto_unroll_max_step', 1500), ('unroll_explicit', 1)], None, 772374

DEBUG: autotvm: No: 176 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (InstantiationError('Traceback (most recent call last):\n [bt] (8) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (7) /workplace/software/tvm/tvm8/build/libtvm.so(+0x2d3e246) [0x7fe55815c246]\n [bt] (6) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule) const+0x217) [0x7fe557e81bb7]\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::PassNode::operator()(tvm::IRModule) const+0xa0) [0x7fe557e9b460]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::SequentialNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x913) [0x7fe5581569a3]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::transform::Pass::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x223) [0x7fe558166ca3]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::tir::transform::PrimFuncPassNode::operator()(tvm::IRModule, tvm::transform::PassContext const&) const+0x90a) [0x7fe55889daba]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::TVMRetValue tvm::runtime::PackedFunc::operator()<tvm::tir::PrimFunc, tvm::IRModule, tvm::transform::PassContext>(tvm::tir::PrimFunc&&, tvm::IRModule&&, tvm::transform::PassContext&&) const+0x177) [0x7fe5588a48a7]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4b42e6b) [0x7fe559f60e6b]\n File "tvm/_ffi/_cython/./packed_func.pxi", line 55, in tvm._ffi._cy3.core.tvm_callback\n File "/workplace/software/tvm/tvm8/python/tvm/autotvm/measure/measure_methods.py", line 747, in verify_pass\n raise InstantiationError("Skipped because of invalid gpu kernel")\ntvm.autotvm.task.space.InstantiationError: Skipped because of invalid gpu kernel'), ), error_no = 1, all_cost = 0.05487465858459473, timestamp = 1620783112.4269478)[('tile_f', [-1, 2, 1, 128]), ('tile_y', [-1, 1, 7, 1]), ('tile_x', [-1, 7, 1, 1]), 
('tile_rc', [-1, 16]), ('tile_ry', [-1, 3]), ('tile_rx', [-1, 3]), ('auto_unroll_max_step', 1500), ('unroll_explicit', 0)], None, 402811

DEBUG: autotvm: No: 177 GFLOPS: 0.00 / 0.00 result: MeasureResult(costs = (RuntimeError('Traceback (most recent call last):\n [bt] (5) /workplace/software/tvm/tvm8/build/libtvm.so(TVMFuncCall+0x10d) [0x7fe559f5e97d]\n [bt] (4) /workplace/software/tvm/tvm8/build/libtvm.so(+0x4c3c3ca) [0x7fe55a05a3ca]\n [bt] (3) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCWrappedFunc::operator()(tvm::runtime::TVMArgs, tvm::runtime::TVMRetValue*) const+0xadf) [0x7fe55a0678ef]\n [bt] (2) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCClientSession::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)> const&)+0x65) [0x7fe55a04c3b5]\n [bt] (1) /workplace/software/tvm/tvm8/build/libtvm.so(tvm::runtime::RPCEndpoint::CallFunc(void*, TVMValue const*, int const*, int, std::function<void (tvm::runtime::TVMArgs)>)+0x594) [0x7fe55a032914]\n [bt] (0) /workplace/software/tvm/tvm8/build/libtvm.so(dmlc::LogMessageFatal::~LogMessageFatal()+0x11c) [0x7fe557a88cdc]\n File "/workplace/software/tvm/tvm8/src/runtime/rpc/rpc_endpoint.cc", line 807\nTVMError: Check f'), ), error_no = 4, all_cost = 1.8380303382873535, timestamp = 1620783130.8207612)[('tile_f', [-1, 2, 1, 16]), ('tile_y', [-1, 1, 7, 1]), ('tile_x', [-1, 1, 7, 1]), ('tile_rc', [-1, 1]), ('tile_ry', [-1, 1]), ('tile_rx', [-1, 3]), ('auto_unroll_max_step', 512), ('unroll_explicit', 0)], None, 213565

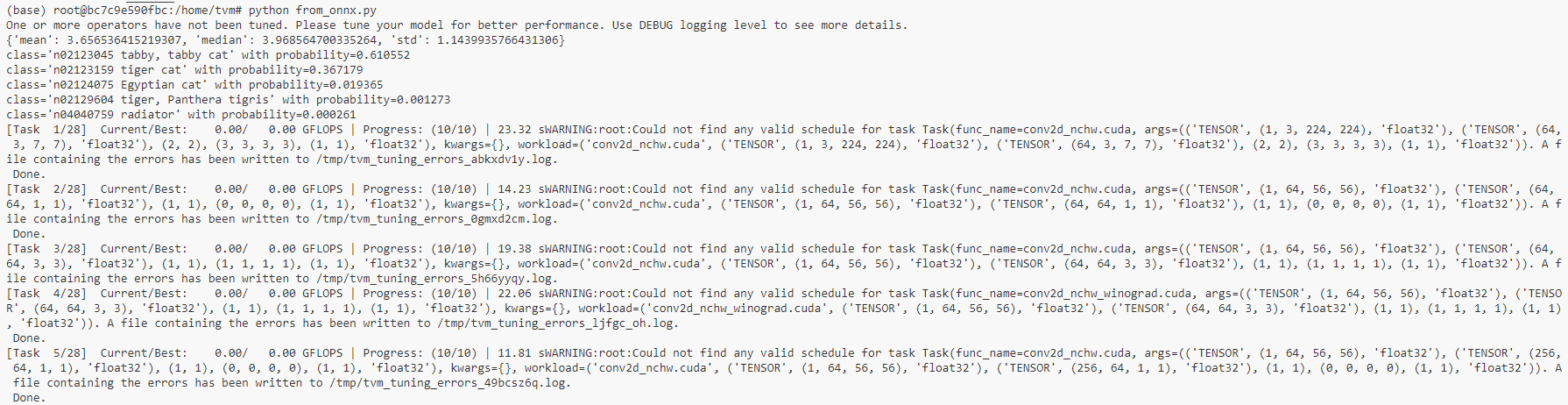

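The log above shows two kinds of failures: InstantiationError (error_no = 1) with the message "Skipped because of invalid gpu kernel", and RuntimeError (error_no = 4) raised from rpc_endpoint.cc, line 807. The error may be related to the first message, but I do not understand what it means.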
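To see how the failures split between the two error codes, the debug output can be tallied with a few lines of Python. This is a rough sketch: tally_errors and the sample strings are made up for illustration (the sample lines are shortened stand-ins, not the real log), and the two names are my reading of the MeasureErrorNo codes in tvm/autotvm/measure/measure.py.

```python
import re
from collections import Counter

# Assumed mapping of the two codes seen in the log to their MeasureErrorNo names.
ERROR_NAMES = {1: "INSTANTIATION_ERROR", 4: "RUNTIME_DEVICE"}


def tally_errors(log_text):
    """Count how often each error_no value appears in autotvm debug output."""
    codes = (int(m) for m in re.findall(r"error_no\s*=\s*(\d+)", log_text))
    return Counter(codes)


# Shortened stand-ins for debug lines; paste the real log text here instead.
sample = "\n".join([
    "No: 161 ... error_no = 4, all_cost = 2.36",
    "No: 162 ... error_no = 1, all_cost = 0.06",
    "No: 163 ... error_no = 4, all_cost = 2.06",
])

counts = tally_errors(sample)
for code, n in sorted(counts.items()):
    print(code, ERROR_NAMES.get(code, "other"), n)
```

In the excerpt above (No: 161 to No: 177), 10 of the 17 trials report error_no = 1 and 7 report error_no = 4.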

GPU: GeForce GTX 1080 Ti