Hi,

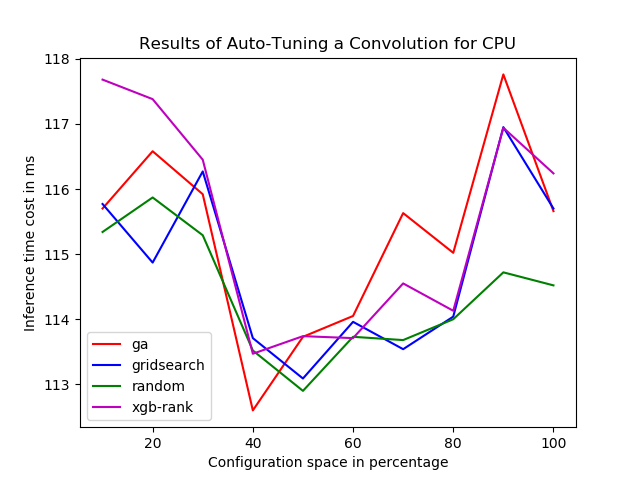

I compared different tuning parameters in TVM auto-tuning and plotted the results in the graph above. My understanding was that increasing the configuration space might make tuning take less time, but my results don't match that. I can't make sense of the behavior: in my case, the inference time also increases as the configuration space grows.

Please correct me if my understanding is wrong.

Also, are there any documents that explain auto-tuning in more detail?